Exploring Offline Large Language Models: Implementation and Customization Strategies

Large Language Models (LLMs) have changed how we approach Natural Language Processing (NLP), advancing tasks such as text generation, sentiment analysis, and translation. However, many LLM services depend on an internet connection, which becomes a problem when connectivity is limited or data must stay on-premises. To address this, developers are increasingly turning to offline LLMs. This article looks at implementation and customization strategies for offline LLMs and at how you can use these models without internet constraints.

Several open-source projects, such as GPT4All, let organizations deploy pre-trained language models locally. Running models this way can improve data privacy and reduce operational costs while keeping full control over the data. Customization matters too: fine-tuning a model on data from a specific business domain can improve its performance on the tasks that matter to that business.

Open-source projects such as Kanaries and LangChain also provide developers with tools that complement offline LLMs, helping them analyze their data and build applications without depending on a hosted service.

The two projects have different strengths. Kanaries focuses on data visualization and exploratory analysis through its RATH tool, while LangChain is a framework for building LLM applications, such as text generation and question-answering pipelines, that can be connected to locally hosted models. Neither project ships model weights itself: the weights usually come from model hubs such as Hugging Face or from the projects that release them, and LangChain connects to whichever local model runner you choose.

Combined with a local model, these projects work without continuous internet access and are approachable for developers at every level, from machine learning novices to experts.

Another resource worth exploring is Gorilla, a UC Berkeley project that fine-tunes an LLM to generate accurate API calls from natural-language instructions. Gorilla uses retriever-aware training: relevant API documentation is retrieved and appended to the instruction during training, which helps the model follow instructions, adapt to documentation changes, and make fewer hallucinated API calls. Because its model weights are openly released, it can run on local infrastructure, giving teams more flexibility and control. The sections below look at these tools in more detail.
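To make "retriever-aware" concrete, here is a minimal sketch of the prompt side of the idea: retrieve the most relevant API document for an instruction and append it to the prompt. It is loosely modeled on how Gorilla describes the technique, not Gorilla's code. The documentation entries are hypothetical, and a crude token-overlap score stands in for a real retriever such as BM25.

```python
import re

# Hypothetical API documentation store, for illustration only.
API_DOCS = [
    {"api": "torch.hub.load", "doc": "Load a pretrained model from a Torch Hub repository."},
    {"api": "transformers.pipeline", "doc": "Create a Hugging Face pipeline for a task such as translation."},
]


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric tokens used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(instruction: str, docs: list[dict]) -> dict:
    """Return the doc sharing the most tokens with the instruction (stand-in retriever)."""
    query = tokenize(instruction)
    return max(docs, key=lambda d: len(query & tokenize(d["api"] + " " + d["doc"])))


def build_prompt(instruction: str, docs: list[dict]) -> str:
    """Append the retrieved documentation to the user's instruction."""
    best = retrieve(instruction, docs)
    return f"{instruction}\nUse this API documentation for reference: {best['api']}: {best['doc']}"


print(build_prompt("Translate English text to French with a pipeline", API_DOCS))
```

During training, prompts of this shape teach the model to rely on the supplied documentation; at inference time, swapping in updated docs is what lets the model track API changes.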

Table of Contents

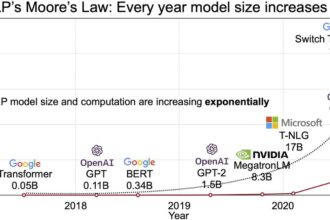

An End-to-End Guide to Different Types of Offline Language Models

When working with offline LLMs, a key question is how to use them effectively without a network connection. Running an LLM locally requires an open-source model that you are free to modify and that runs on your device with acceptable latency. It also helps to understand what LLMs are: deep learning models pre-trained on vast text datasets, typically built on the transformer architecture and its self-attention mechanism (most current generative LLMs use a decoder-only variant rather than the original encoder-decoder design). Training an LLM on custom data involves several steps: identifying relevant data sources of sufficient volume, ensuring the data is of high quality, and converting it into the format the chosen model and training tools expect.
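The data-preparation steps above can be sketched in a few lines. This is a minimal illustration, assuming plain-text inputs, a simple length threshold as the quality filter, exact-duplicate removal, and a `{"text": ...}` JSONL record as the target format; the right schema depends on the training tool you use.

```python
import json


def prepare_records(texts, min_chars=20):
    """Normalize whitespace, drop short or duplicate texts, and return JSONL lines."""
    seen = set()
    lines = []
    for raw in texts:
        text = " ".join(raw.split())  # collapse runs of whitespace
        if len(text) < min_chars:     # quality filter: too short to be useful
            continue
        if text in seen:              # exact-duplicate filter
            continue
        seen.add(text)
        lines.append(json.dumps({"text": text}))  # assumed record schema
    return lines


records = prepare_records([
    "short",
    "A sufficiently long training example.",
    "A  sufficiently long   training example.",
])
print(records)
```

Real pipelines usually add near-duplicate detection and language or toxicity filters, but the shape of the work (normalize, filter, serialize) stays the same.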

Among the tools for working with offline language models, Gorilla stands out for a specific task: its models are trained to turn natural-language requests into API calls for machine learning libraries, such as models hosted on Hugging Face, TorchHub, and TensorFlow Hub, covering text, vision, and other modalities. Because the weights are openly available, developers can host these models on their own infrastructure and keep control over their data.

Another notable project is NVIDIA's Megatron-LM, an open-source library for training large transformer language models efficiently at scale. It distributes training across many GPUs using techniques such as tensor (intra-layer) and pipeline model parallelism, which lets organizations train models tailored to specific domains or applications on their own hardware. Because training runs on infrastructure the organization controls, sensitive data need not leave its environment, which helps preserve confidentiality.
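The core idea behind tensor model parallelism can be shown without GPUs. In this sketch (plain Python, not Megatron-LM code), a linear layer's weight matrix is split column-wise into shards, each simulated "device" multiplies the input by its own shard, and the partial outputs are concatenated, which plays the role of an all-gather. The result matches the unsplit multiplication.

```python
def matmul(x, w):
    """Multiply row vector x (length n) by matrix w (n rows x m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, parts):
    """Split w column-wise into `parts` shards of equal width (one per device)."""
    cols = len(w[0])
    assert cols % parts == 0, "column count must divide evenly across devices"
    width = cols // parts
    return [[row[k * width:(k + 1) * width] for row in w] for k in range(parts)]


def column_parallel_matmul(x, w, parts):
    """Each simulated device computes x @ shard; outputs are concatenated (all-gather)."""
    outputs = [matmul(x, shard) for shard in split_columns(w, parts)]
    return [value for out in outputs for value in out]


x = [1.0, 2.0]
w = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 3.0]]
print(matmul(x, w))                          # [1.0, 2.0, 4.0, 7.0]
print(column_parallel_matmul(x, w, parts=2))  # [1.0, 2.0, 4.0, 7.0]
```

In a real system each shard lives on a different GPU, so no single device needs to hold the full weight matrix; that memory saving is what makes very large layers trainable.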

Whether through repositories like Gorilla or projects like Megatron-LM, organizations can use offline LLMs for tasks such as text generation, question answering, and training domain-specific language models, all without depending on an internet connection.

Unlocking Privacy, Control, and Cost Reduction with GPT4All

GPT4All lets organizations deploy pre-trained language models locally, so they can run them without an internet connection, keep their data private, lower operational costs, and retain complete control over their information. Models can also be customized to suit specific business needs, which makes GPT4All useful across a range of applications.

Running a local, ChatGPT-style LLM through GPT4All on your PC or Mac is a private and efficient way to use AI capabilities without external dependencies. Note that GPT4All runs open-source models with a chat interface similar to ChatGPT, not OpenAI's ChatGPT itself. GPT4All is available for Windows, macOS, and Ubuntu: download the app from the official website, select a model that fits your system's resources, and you can use it locally for text generation or question answering.

To use GPT4All from Python or another supported environment, you first need to download the model file you want to run. GPT4All offers multiple free models, ranging from about 3 to 10 GB each, so developers can pick one that matches their project's requirements and hardware.
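For a strictly offline setup, it helps to load a model file that has already been downloaded and to fail clearly if it is missing, rather than letting the library try to fetch it. This sketch assumes the `gpt4all` Python package is installed. The model file name you pass in is only an example, and `load_local_model` is a hypothetical helper, not part of GPT4All.

```python
from pathlib import Path


def load_local_model(model_name: str, model_dir: str):
    """Load an already-downloaded GPT4All model without touching the network (hypothetical helper)."""
    model_path = Path(model_dir)
    if not (model_path / model_name).is_file():
        # Fail fast with a clear message instead of attempting a download.
        raise FileNotFoundError(f"{model_name} not found in {model_path}")
    from gpt4all import GPT4All  # imported lazily; requires `pip install gpt4all`
    # allow_download=False keeps GPT4All from reaching out to the internet.
    return GPT4All(model_name, model_path=str(model_path), allow_download=False)
```

Once a file such as `orca-mini-3b-gguf2-q4_0.gguf` is in the `models` directory, `model = load_local_model("orca-mini-3b-gguf2-q4_0.gguf", "models")` followed by `model.generate("Name three benefits of local LLMs.", max_tokens=200)` inside a `with model.chat_session():` block produces a reply entirely on your machine.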

Because models run locally, integrating GPT4All into your workflow keeps data on your own machines, and the range of available models lets you choose one suited to each use case. Its offline support and user-friendly desktop interface also make it accessible to individuals and teams without advanced coding skills.

How to Use LLMs Offline on Different Platforms

To use LLMs offline on Windows, Linux, or macOS, you can choose from several frameworks built for local deployment. One prominent option is LM Studio, a cross-platform desktop application with a graphical interface that lets you browse and download models from Hugging Face at no cost. Once a model is downloaded, LM Studio runs it locally without an internet connection for tasks such as text generation and question answering.
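LM Studio can also run a local server that exposes OpenAI-compatible endpoints, so other programs on the same machine can query the loaded model. The sketch below builds such a request with the standard library only. It assumes the server is running at `http://localhost:1234/v1` (a common default; check the app's server settings), and `"local-model"` is a placeholder model identifier.

```python
import json
import urllib.request


def build_chat_request(prompt, model="local-model", base_url="http://localhost:1234/v1"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # placeholder; use the identifier shown in LM Studio
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request to the local server and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send(build_chat_request("Summarize the benefits of local LLMs."))` only works while the local server is running, but no traffic leaves the machine.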

If you want to run LLMs on your own computer without relying on external services, several other tools work across platforms. Frameworks such as GPT4All, LM Studio, Jan, llama.cpp, llamafile, and Ollama make it straightforward to set up and operate LLMs locally while keeping privacy and control over your data, and they can be used offline or online depending on your preferences.
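As one example of these frameworks, Ollama serves a local REST API (by default on port 11434) once a model has been pulled, for instance with `ollama pull llama3`. This sketch builds a non-streaming request to its `/api/generate` endpoint and parses the reply. The model name `llama3` is an assumption; use any model you have pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model, prompt):
    """Build a non-streaming request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(raw: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(raw)["response"]
```

With Ollama running, `urllib.request.urlopen(build_generate_request("llama3", "Why is the sky blue?"))` returns a JSON body whose `response` field holds the answer. Setting `"stream": False` trades incremental output for a single, easy-to-parse reply.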

ChatGPT itself is a hosted OpenAI service and needs an internet connection. Some third-party tools, however, offer a similar chat experience offline by running an open model locally; apps marketed under names such as Offline ChatGPT let users chat with a local model without continuous internet connectivity, so the assistant stays available when you are offline.

For running models locally while keeping data secure and external dependencies to a minimum, Gorilla is also worth considering: its openly released models, trained to generate API calls for machine learning libraries spanning text, code, and vision, can run in offline environments.

In short, frameworks such as LM Studio and GPT4All make it practical to run LLMs locally on a range of platforms, which improves privacy and removes the need for a constant web connection.

Top Open-Source Projects for Offline LLMs

Offline LLMs open up many possibilities for using language models without internet constraints. One repository that serves this need is Gorilla, whose models specialize in generating API calls and can be run locally, giving users more control over their data and better data privacy.

Running an LLM locally means choosing an open-source model that can be freely modified and shared, and adapting it to your needs. Your device also needs enough compute and memory to run inference with acceptable latency. Keeping the model and data local improves privacy and security, since prompts and outputs never leave your machine, and working without an internet connection reduces exposure to external threats.
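A quick way to check whether a model will fit on your device is to estimate the memory its weights need: the number of parameters times the bytes per weight. This sketch covers the weights only and ignores the KV cache, activations, and runtime overhead, so treat its output as a lower bound.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * (bits / 8) bytes, divided by 1e9 bytes per GB,
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_weight / 8


for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB")
```

For a 7-billion-parameter model this gives about 14 GB at 16-bit, 7 GB at 8-bit, and 3.5 GB at 4-bit, which is why quantized models are the usual choice for laptops and desktops.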

Training a custom model for a personalized task requires careful steps. It starts with data preparation: selecting task-specific documents and then generating synthetic training data, for example with Hugging Face tools for dataset creation. An embedding model can then be fine-tuned on this synthetic data so that its performance is tailored to your requirements.
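The synthetic-data step can be sketched as follows: split each task-specific document into passages, ask a generator for a question each passage answers, and store (query, passage) pairs for embedding fine-tuning. The `generate_question` callable is a hypothetical hook (in practice it would prompt a local LLM), `toy_question` is only a stand-in, and the `query`/`positive` field names are an assumed schema; match whatever your fine-tuning library expects.

```python
import json
from typing import Callable, Iterable


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split a document into passages of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def make_pairs(docs: Iterable[str], generate_question: Callable[[str], str],
               max_words: int = 50) -> list[str]:
    """Return JSONL lines pairing a synthetic question with the passage it came from."""
    lines = []
    for doc in docs:
        for passage in chunk(doc, max_words):
            pair = {"query": generate_question(passage), "positive": passage}  # assumed schema
            lines.append(json.dumps(pair))
    return lines


def toy_question(passage: str) -> str:
    """Stand-in generator; a real pipeline would prompt a local LLM here."""
    return f"What does this passage say about {passage.split()[0]}?"


print(make_pairs(["alpha beta gamma delta"], toy_question, max_words=2))
```

Each pair tells the embedding model that the question and its source passage should land close together, which is what adapts retrieval to your documents.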

- Utilize open-source projects like GPT4All to deploy pre-trained language models locally for offline use, enhancing data privacy and reducing operational costs.

- Customize models for specific business needs to improve their performance on the applications that matter to you.

- Explore projects like Kanaries and LangChain for complementary tooling: data exploration and visualization in Kanaries, and an application framework in LangChain that can connect to local models without internet dependency.

- Obtain model weights from model hubs or from the projects that release them, then connect them to your workflows with frameworks such as LangChain during customization, so development can continue without continual internet support.