Is an LLM a Type of Generative Adversarial Network?

No, a Large Language Model (LLM) is not a type of Generative Adversarial Network (GAN). Despite both being generative models in machine learning, LLMs and GANs differ fundamentally in structure, training, and application. They serve distinct roles within AI.

Understanding Generative Adversarial Networks (GANs)

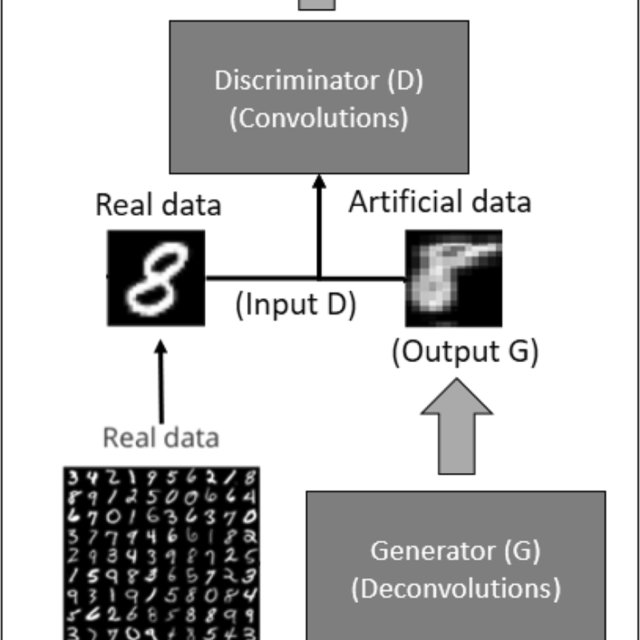

GANs were introduced in 2014 by Ian Goodfellow and colleagues. The model comprises two neural networks: a generator and a discriminator. These networks operate in competition, forming a game-like system.

- Generator: Creates synthetic data, such as images resembling real samples.

- Discriminator: Attempts to distinguish real data from generator-produced fakes.

The training process is adversarial. The generator improves to fool the discriminator, while the discriminator gets better at detection. This tug-of-war leads to realistic data creation, mostly in continuous domains like images or video rather than discrete text.
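The tug-of-war above can be written down as two loss functions. What follows is a minimal sketch using the standard binary cross-entropy formulation with the common "non-saturating" generator loss; the function names and the example probabilities are made up for illustration and do not come from any particular library.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: probabilities the discriminator assigns to real samples being real.
    d_fake: probabilities it assigns to generated samples being real.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: small when fakes are scored as real."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)

# Illustrative numbers only: a discriminator that separates real from fake well...
winning = discriminator_loss([0.9, 0.8], [0.1, 0.2])
# ...and one that has been fooled and outputs 0.5 for everything.
fooled = discriminator_loss([0.5, 0.5], [0.5, 0.5])

print(f"discriminator loss when winning: {winning:.3f}")
print(f"discriminator loss when fooled:  {fooled:.3f}")
print(f"generator loss when caught:      {generator_loss([0.1, 0.2]):.3f}")
print(f"generator loss when convincing:  {generator_loss([0.5, 0.5]):.3f}")
```

The two losses pull in opposite directions: whatever lowers the generator's loss (fakes scored closer to "real") raises the discriminator's, which is what makes the setup adversarial.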

Common GAN applications include image synthesis, video generation, and data augmentation to improve machine learning datasets.

Large Language Models (LLMs) Explained

LLMs specialize in language tasks. Models such as GPT and BERT are built on the transformer architecture, which is well suited to sequential text data. Their design focuses on capturing context and meaning in human language.

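- Autoregressive models such as GPT predict the next word in a sequence based on previous words; BERT-style models instead fill in masked words using context on both sides.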

- They handle summarization, translation, question answering, and sentiment analysis.

Training an LLM uses gradient descent with backpropagation to reduce prediction error, typically a cross-entropy loss, across huge text corpora. GANs also rely on backpropagation; the difference is that LLM training has no adversarial component. Instead, the model learns patterns and syntax through statistical optimization of a single objective.
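The "prediction error" here is usually measured as cross-entropy: the negative log-probability the model assigned to the word that actually came next. Below is a small sketch of that calculation, assuming a hypothetical three-word vocabulary and hand-picked scores (logits); a real model produces scores over tens of thousands of tokens.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits, target_index):
    """Cross-entropy: negative log-probability of the word that actually followed."""
    return -math.log(softmax(logits)[target_index])

# Hypothetical vocabulary for the context "the cat sat on the ..."
vocab = ["mat", "dog", "sky"]
target = vocab.index("mat")

confident_right = next_token_loss([4.0, 1.0, -2.0], target)  # high score on "mat"
uniform = next_token_loss([0.0, 0.0, 0.0], target)           # no preference at all
confident_wrong = next_token_loss([-2.0, 4.0, 1.0], target)  # high score on "dog"

print(confident_right, uniform, confident_wrong)
```

Training nudges the model's parameters so this loss shrinks on average across the corpus. There is only one network and one loss, with no opponent to beat.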

Key Differences Between LLMs and GANs

| Aspect | LLM (Large Language Model) | GAN (Generative Adversarial Network) |
|---|---|---|
| Architecture | Single transformer-based network designed for sequential text data | Two networks (generator and discriminator) in adversarial setup |
| Training | Backpropagation minimizing prediction error (non-adversarial) | Adversarial process where generator and discriminator compete |
| Primary Output | Sequences of text with syntactic and semantic coherence | High-fidelity synthetic data (often images or videos) |
| Main Focus | Language understanding and generation | Data synthesis and augmentation |

Hybrid Approaches and Interactions

While LLMs are not GANs, research explores combinations. Some work uses GANs to improve or augment text generated by LLMs; other work uses GANs to generate synthetic datasets for LLM training, or applies GAN-like adversarial techniques to test LLM robustness.

These intersections do not redefine LLMs as GANs. Instead, they represent complementary methods harnessing strengths from both paradigms.

Summary

- LLMs and GANs share the generative label but differ in structure and training.

- LLMs excel at text generation using transformer models without adversarial training.

- GANs rely on two competing networks to produce realistic non-text data.

- Hybrid models combine elements of both, expanding generative AI capabilities.

Is an LLM a Type of Generative Adversarial Network?

No, a Large Language Model (LLM) is not a type of Generative Adversarial Network (GAN). While both LLMs and GANs are generative models in machine learning, they belong to distinct architectural families and use fundamentally different training methodologies. This post dives into why that is and explores the unique strengths and workings of each.

Understanding the Basics: What Is an LLM?

Imagine handing a machine a massive library and asking it to predict sentences or even whole paragraphs — that’s an LLM’s game. Large Language Models, like GPT and BERT, are deep learning marvels designed specifically for understanding and generating text that feels human. Their secret weapon? The transformer architecture with self-attention mechanisms that capture language context exceptionally well.

For example, type “The capital of France is” into a system powered by an LLM, and it will promptly finish with “Paris.” The model doesn’t just memorize facts; it learns patterns from vast datasets and predicts the most probable next word. Its applications stretch beyond simple text autocomplete: think translation, summarization, and sentiment analysis.
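To make the "Paris" example concrete, here is a toy sketch of greedy generation: look up the probabilities for the next word, append the most probable one, and repeat. The probability table is invented for illustration and keyed on the previous word only; a real LLM computes these probabilities with a transformer over the whole context.

```python
# Hypothetical next-word probabilities (made-up numbers, not from a real model).
NEXT_WORD_PROBS = {
    "is": {"Paris": 0.85, "Lyon": 0.10, "big": 0.05},
}

def generate(prompt, max_new_words=5):
    """Greedy decoding: repeatedly append the single most probable next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = NEXT_WORD_PROBS.get(words[-1])
        if candidates is None:           # the toy table has nothing to say; stop
            break
        words.append(max(candidates, key=candidates.get))
    return " ".join(words)

completion = generate("The capital of France is")
print(completion)
```

Production systems often sample from the distribution instead of always taking the top word, which is one reason the same prompt can produce different answers.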

Quick Intro to GANs: A Different Style of Creation

In 2014, Ian Goodfellow introduced a clever concept called Generative Adversarial Networks (GANs). Unlike LLMs, GANs have a duo—two neural nets locked in a competition that pushes each to become better.

- Generator: Produces synthetic data, like an artist creating fake paintings.

- Discriminator: The critic who tells if the artwork is real or a forgery.

This “adversarial” game continues until the generator fabricates data so convincing the discriminator struggles to tell it apart from genuine data. GANs shine in tasks like image synthesis, video generation, and producing high-quality visuals for creative industries.

Key Architectural Differences

LLMs rely on a single transformer-based neural network. Its job? Decode and predict sequences of words. The model processes text as sequences, exploring relationships within sentences using self-attention. Think of it as reading a novel and guessing the next sentence based on context.
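Self-attention can be sketched in a few lines. The version below is deliberately simplified: it uses the token vectors themselves as queries, keys, and values (a real transformer applies learned projections first), and it applies the causal mask used by GPT-style models so each position only looks at earlier ones. The 2-dimensional "embeddings" are made up.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(x):
    """Scaled dot-product self-attention over a list of token vectors.

    Returns (outputs, weights). Simplification: queries, keys, and values
    are the inputs themselves, with no learned projection matrices.
    """
    d = len(x[0])
    outputs, weights = [], []
    for i, query in enumerate(x):
        # Position i may only attend to positions 0..i (no peeking ahead).
        scores = [sum(q * k for q, k in zip(query, x[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        w = softmax(scores)
        # Output is the attention-weighted average of the visible vectors.
        out = [sum(w[j] * x[j][c] for j in range(i + 1)) for c in range(d)]
        weights.append(w + [0.0] * (len(x) - i - 1))  # zero weight on the future
        outputs.append(out)
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical embeddings
outputs, weights = causal_self_attention(tokens)
for row in weights:
    print([round(v, 3) for v in row])
```

Each row of `weights` shows how much one position draws on the others, which is how the model relates words within a sentence.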

GANs play a two-player game. The generator creates fake data, and the discriminator judges it. Training is adversarial, meaning both improve by trying to outsmart each other. While some GANs can handle text, their traditional forte is images and videos, where the data is continuous rather than made of discrete tokens.

Training Techniques: Different Recipes for Different Outcomes

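| LLMs | GANs |
|---|---|
| One network trained on large text corpora to predict the next word; backpropagation minimizes prediction error, with no adversarial component | Two networks trained together in an adversarial game; the generator tries to fool the discriminator, which learns to tell real data from fakes |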

LLM training is straightforward: feed in text, learn patterns, get better at prediction. GAN training is more of a dramatic showdown: two networks are constantly learning and adapting to defeat each other’s tactics.
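The "showdown" boils down to alternating updates: one step for the discriminator with the generator frozen, then one step for the generator with the discriminator frozen. Here is a toy one-dimensional sketch with hand-derived gradients. Everything in it is an assumption chosen for illustration (the generator only learns a shift `theta`, the discriminator is a single logistic unit, and the real data is drawn from a normal distribution with mean 4); it is not how production GANs are built, and GAN training in general is not guaranteed to converge.

```python
import math
import random

def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.05, seed=0):
    """Toy 1-D GAN.

    Generator:      G(z) = z + theta          (learns a shift)
    Discriminator:  D(x) = sigmoid(w * x + b)
    Real data:      samples from N(4, 1)      (illustrative choice)
    """
    rng = random.Random(seed)
    theta, w, b = 0.0, 0.1, 0.0
    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)
        fake = rng.gauss(0.0, 1.0) + theta

        # Discriminator step: lower -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * real + b)
        d_fake = sigmoid(w * fake + b)
        grad_w = (d_real - 1.0) * real + d_fake * fake
        grad_b = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        b -= lr * grad_b

        # Generator step: lower -log D(fake), using the updated discriminator.
        d_fake = sigmoid(w * fake + b)
        theta -= lr * (d_fake - 1.0) * w
    return theta, w, b

theta, w, b = train_toy_gan()
print(f"learned shift theta = {theta:.2f} (real data is centered at 4.0)")
```

An LLM's training loop has no second half: there is a single network and a single loss, so each step is just "compute the prediction error, update the parameters."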

Output Differences: Text Versus Synthetic Data

When you see words streaming out of ChatGPT, that’s an LLM. These models generate fluent, contextually relevant, and coherent text based on the input.

GANs, on the other hand, don’t write prose; they create realistic images, videos, and other data types. The output is novel but mimics the real data seen during training, and producing it is not a next-word prediction problem.

Applications Reflect Their Strengths

- LLMs power chatbots, automatic translations, content creation like blog writing, and even sentiment analysis. Their prowess lies in understanding and generating human language.

- GANs excel in artistic domains, including generating photorealistic images, video creation for entertainment, data augmentation for machine learning, and super-resolution imaging.

So, Why Do Some People Get Confused?

Both LLMs and GANs are “generative.” They create new examples based on learned data. But structure and training separate their DNA. LLMs create sequential, linguistic data via predictive modeling with a single network. GANs rely on adversarial competition between two networks and focus primarily on visual data.

However, the AI community sometimes tries to combine both approaches, for example by using GAN frameworks to enhance text generation quality or to security-test LLMs. These hybrids don’t merge their identities but rather borrow each other’s strengths for novel applications.

A Real-World Analogy

Think of LLMs as master storytellers having read millions of books, generating coherent tales based on patterns of language. GANs are more like talented forgers crafting paintings that look so real, critics can’t tell the difference.

Both produce new content but specialize in vastly different crafts.

What About the Future? Could LLMs Evolve Into GANs?

The short answer is probably not. Their core mechanics are just too different. But AI evolution often surprises us. Hybrid approaches, such as adding adversarial training elements to language models, might emerge more frequently.

Imagine LLMs that can internally ‘challenge’ their outputs to improve quality—sort of an in-house discriminator—but that doesn’t make them GANs. It’s just adding flavors from adversarial methods onto fundamentally different architectures.

Wrapping It Up

- LLMs and GANs serve distinct purposes and operate on different principles.

- LLMs are single-network text prediction and generation systems.

- GANs rely on two networks competing to generate realistic data, typically images or video.

- They are not interchangeable, and an LLM is definitely not a type of GAN.

- Combining their capabilities is an exciting frontier but doesn’t blur the distinction.

“Is LLM a type of GAN?” No—and understanding their difference helps appreciate the diversity in generative AI technologies.

Is an LLM a type of Generative Adversarial Network (GAN)?

No, an LLM is not a type of GAN. LLMs are transformer-based models designed for text prediction and understanding, while GANs consist of two networks competing to generate realistic synthetic data.

How do the training methods of LLMs and GANs differ?

LLMs are trained to predict the next word in a sequence using backpropagation on large amounts of unlabeled text, where the text itself supplies the targets. GANs train two networks simultaneously in an adversarial game, one generating fake data and the other distinguishing it from real data.

Do LLMs use the generator-discriminator architecture like GANs?

No. LLMs use a transformer architecture built around self-attention. GANs require a generator to create data and a discriminator to judge its authenticity; LLMs have no such pair of networks.

What type of outputs do LLMs and GANs produce?

LLMs generate coherent text sequences based on input prompts. GANs produce new, realistic data instances like images or videos that resemble the training data but are not exact copies.

Can GANs be used for text generation like LLMs?

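GANs are mostly used for non-text data such as images and videos. They can be adapted to text, but because words are discrete, the discriminator’s feedback cannot flow back to the generator through ordinary gradients, which makes training harder. In practice, text generation relies on LLMs with transformer architectures rather than GAN frameworks.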