Understanding the Importance of Evaluating Fine-Tuned LLMs

Oh, evaluating fine-tuned Large Language Models (LLMs) can feel a bit like judging a robot talent show! 🤖🎤 You’ve got to make sure those models shine brighter than a disco ball in real-world scenarios. And lucky for you, I have all the insider tips on how to get down to the nitty-gritty of LLM evaluation. Let’s dive in!

Fine-tuning LLMs is like giving them a personalized makeover — you adapt a pre-trained model to excel in a specific task or domain. But the real magic happens when you need to assess how well your model has learned its fancy new tricks. That’s where LLM evaluation steps onto the stage with glitter and glam.

So, picture this: you’ve fine-tuned your LLM, and now you want to see if it’s ready for its grand performance in the real world. Cue the dramatic music because it’s time for an evaluation showdown!

When it comes to evaluating those beautifully enhanced language models, think of it as checking if your plant babies are thriving after some extra TLC. It’s essential to ensure your LLM isn’t just all bark and no byte!

By running an LLM evaluation, you’re basically giving your model a report card on how well it performs when faced with different tasks or scenarios out there in the big bad world.

So, How Does the LLM Eval Module Work?

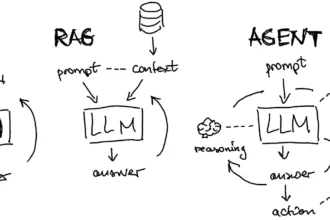

The LLM Eval module puts your fine-tuned models through their paces by comparing their predicted answers against reference strings. It’s like setting up a showdown between a super-smart AI judge and your model’s responses.

It measures accuracy by seeing how closely those predictions match up with the ground-truth answers—kind of like checking if your ‘smart’ pet really understands those funny cat memes or just pretends to!
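The article doesn't spell out how the LLM Eval module compares answers internally, so here is only a minimal sketch of the general idea: a normalized exact-match accuracy over prediction/reference pairs. The normalization rules (lowercasing, dropping punctuation and the articles "a", "an", "the") follow a common SQuAD-style convention and are an assumption, not Clarifai's implementation; the sample answers are made up.

```python
import re
import string


def normalize(text: str) -> str:
    """Lowercase, strip punctuation and articles, and collapse whitespace (assumed convention)."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that equal their reference string after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(predictions)


# Made-up examples: two answers match their references, one does not.
preds = ["The Eiffel Tower.", "paris", "1889"]
refs = ["Eiffel Tower", "Paris", "1887"]
print(exact_match_accuracy(preds, refs))  # 0.6666666666666666
```

Normalizing first keeps the score from punishing harmless differences like capitalization or a trailing period, while still counting a wrong year as a miss.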

Evaluation Templates at Your Service

Now, let’s talk templates!

- LLM-as-a-Judge: Ever thought about letting one smart AI judge another? This template puts that exact idea to work! It helps measure aspects like relevance, creativity, and correctness, all essential traits for top-notch word wizardry.

- TruthfulQA: Here things get spicy! This tests whether your model is spinning fake news or keeping it real, by asking questions that tempt it to repeat common misconceptions. Overlap metrics help too: some models are great at translating human texts accurately (measured with BLEU), while others ace summarization (measured with ROUGE).

- General Template: A classic choice for evaluating language models using NLP metrics like F1 score and Exact Match. It checks that your model doesn’t just throw buzzwords around but actually produces the answers the references expect.

- Customized Delights: Sometimes off-the-shelf just doesn’t cut it! With custom templates, you have the freedom to tailor evaluations to YOUR rules and priorities. Creative freedom at its finest!
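The article doesn’t show how the LLM-as-a-Judge template is built, so the following is just a minimal sketch of the general pattern: wrap each question/answer pair in a rubric prompt, send it to a judge model, and parse a numeric score from the reply. The rubric wording, the 1 to 5 scale, the "Score: N" reply format, and the `judge` callable are all assumptions for illustration; `fake_judge` stands in for a real LLM call.

```python
import re
from statistics import mean
from typing import Callable, Optional

# Hypothetical rubric; a real template would define its own criteria and wording.
JUDGE_TEMPLATE = (
    "You are grading an answer for {criterion}.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Reply with a single integer score from 1 (poor) to 5 (excellent), "
    "formatted as 'Score: N'."
)


def build_judge_prompt(question: str, answer: str, criterion: str) -> str:
    """Fill the rubric template for one question/answer pair."""
    return JUDGE_TEMPLATE.format(criterion=criterion, question=question, answer=answer)


def parse_score(judge_reply: str) -> Optional[int]:
    """Extract the 1-5 score from the judge's reply, or None if the reply is malformed."""
    match = re.search(r"Score:\s*([1-5])\b", judge_reply)
    return int(match.group(1)) if match else None


def judge_answers(
    samples: list[tuple[str, str]],
    criterion: str,
    judge: Callable[[str], str],
) -> Optional[float]:
    """Average judge score over (question, answer) pairs, skipping unparseable replies."""
    scores = []
    for question, answer in samples:
        score = parse_score(judge(build_judge_prompt(question, answer, criterion)))
        if score is not None:
            scores.append(score)
    return mean(scores) if scores else None


# Stand-in judge for demonstration only; in practice this would call a strong LLM.
def fake_judge(prompt: str) -> str:
    return "Score: 4" if "Paris" in prompt else "Score: 2"


samples = [("Capital of France?", "Paris"), ("Capital of Spain?", "Lisbon")]
print(judge_answers(samples, "correctness", fake_judge))  # 3
```

Asking the judge for a fixed reply format keeps parsing simple; replies that don’t match are skipped instead of silently counted as zero.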

Savvy enough yet? Oh wait… there’s more fun ahead! Buckle up, because next we dive into practical insights on fine-tuning these beasts and evaluating them effectively.

Step-by-Step Guide to Fine-Tuning LLMs

Now, let’s break down, step by step, how to fine-tune Large Language Models (LLMs) like a pro! Fine-tuning means taking a generic pre-trained model and tailoring it to excel in a specific task or domain. It’s like transforming your plain vanilla robot into one with some serious pizzazz and skills for your unique needs. So, here’s your backstage pass to mastering the art of fine-tuning:

- Single-Task vs. Multi-Task Optimization: First up, you need to decide if you want your LLM to focus on one particular task or juggle multiple tasks like a language-loving circus performer. Single-task optimization involves training the model solely on one task, ensuring it becomes an expert in that specific area. On the other hand, multi-task optimization trains the model on various tasks simultaneously, making it a versatile all-rounder in handling different challenges.

- Scaling Instruction Models: Think of this as giving your LLM growth hormones but without the awkward teenage phase! Instruction tuning trains the model on many tasks phrased as natural-language instructions, and scaling it up (more tasks, bigger models) generally improves how well the model follows instructions it has never seen before. Along the way, adjusting hyperparameters such as the learning rate and number of epochs is like tuning an instrument for perfect harmony: each tweak helps your model sing its best notes.

- Benchmarking Brilliance: Picture this as the ultimate showdown where your fine-tuned LLM gets its chance to shine brightly under the spotlight. Benchmarking allows you to compare your model’s performance against standardized benchmarks and custom criteria — think of it as judging a language Olympics where only those with top-notch abilities get gold medals!
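For the multi-task option above, you still have to decide how often each task shows up during training. One common answer is examples-proportional mixing with a size cap, a technique used in Google’s T5 work; it’s shown here as an illustrative choice, not as what any particular platform does. The task names, dataset sizes, and cap value are made up.

```python
import random


def mixing_rates(task_sizes: dict[str, int], cap: int) -> dict[str, float]:
    """Examples-proportional mixing: weight each task by min(size, cap), then normalize."""
    capped = {task: min(size, cap) for task, size in task_sizes.items()}
    total = sum(capped.values())
    if total == 0:
        raise ValueError("at least one task must have examples")
    return {task: n / total for task, n in capped.items()}


def sample_tasks(rates: dict[str, float], num_samples: int, seed: int = 0) -> list[str]:
    """Draw which task each training example comes from, according to the mixing rates."""
    rng = random.Random(seed)
    tasks = list(rates)
    weights = [rates[t] for t in tasks]
    return rng.choices(tasks, weights=weights, k=num_samples)


# Hypothetical task datasets of very different sizes.
sizes = {"summarization": 200_000, "qa": 50_000, "classification": 5_000}
rates = mixing_rates(sizes, cap=100_000)
print(rates)
print(sample_tasks(rates, num_samples=10))
```

The cap is the design lever: without it, the 200,000-example task would dominate training and the small classification task would barely register.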

So, why go through all this trouble of fine-tuning? Well, just like a tailored suit fits better than off-the-rack clothes, a fine-tuned LLM delivers responses customized for specific tasks or domains, which makes it ideal for industries like science, law, medicine, or any field requiring specialized language mastery.

Remember, when it comes to fine-tuning LLMs, precision is key! It’s about crafting not just any response but the right response for your requirements! So grab that data by the bytes and dive into those parameters, because with great power comes exceptional results!

Tools and Methods for Evaluating Fine-Tuned LLMs

In the dazzling world of Fine-Tuned Large Language Models (LLMs), it’s not just about giving your model an A+ makeover, but also about critically evaluating its performance like a seasoned judge on a talent show. This evaluation phase is where the rubber meets the road and where you separate the star performers from the forgettable ones!

Now, when it comes to tools and methods for evaluating your fine-tuned LLMs, think of it as setting up a fierce showdown between your model and real-world scenarios. At Clarifai, we offer the LLM Eval module – your trusty sidekick in assessing if your model can walk the talk in practical situations. This module acts as a guiding light, helping you pinpoint where your LLM shines bright like a diamond and where it may need a little polish.

When diving into evaluating fine-tuned LLMs, metrics are your best pals. From cross-entropy to precision, recall, and F1-score, these metrics act as judges scoring each aspect of your model’s performance, ensuring it doesn’t just look good on paper but delivers top-notch responses on real-world tasks.

And here’s a nifty tip: just as judging criteria vary between talent shows, different evaluation templates serve specific purposes for your LLMs! Whether you’re testing response completeness or measuring relevance, these templates act like cheat codes that guide you through the evaluation process without breaking a sweat.
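To make the metrics named above a bit more concrete, here is a small sketch, not tied to any particular tool. It computes token-overlap precision, recall, and F1 between one answer and its reference (assuming simple lowercase whitespace tokenization), plus cross-entropy from the probabilities a model assigned to each reference token. Those probabilities are made-up numbers; in practice they would come from the model’s output distribution. Perplexity is simply the exponential of the cross-entropy.

```python
import math
from collections import Counter


def token_prf(prediction: str, reference: str) -> tuple[float, float, float]:
    """Token-overlap precision, recall, and F1 between a prediction and a reference."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0, 0.0, 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def cross_entropy(token_probs: list[float]) -> float:
    """Mean negative log-likelihood (in nats) the model assigned to the reference tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


p, r, f1 = token_prf("the tower is in paris", "the eiffel tower is in paris france")
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")  # precision=1.00 recall=0.71 f1=0.83

ce = cross_entropy([0.5, 0.25, 0.8])  # hypothetical per-token probabilities
print(f"cross-entropy={ce:.3f} perplexity={math.exp(ce):.3f}")  # cross-entropy=0.768 perplexity=2.154
```

Here precision is perfect (every predicted word appears in the reference) while recall drops because "eiffel" and "france" are missing, which is exactly the trade-off F1 balances.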

So gear up with these evaluation tools and start rating those fine-tuned language models with swagger, because when it comes to evaluating LLMs, precision and thoroughness pave the path from average to exceptional performance!

Best Practices for Fine-Tuning LLMs for Specific Tasks

When it comes to fine-tuning Large Language Models (LLMs) for specific tasks, the first step is understanding why fine-tuning is needed at all. While LLMs are jacks-of-all-trades, capable of handling many natural language tasks, their ability to excel in specific scenarios can be hit or miss. Fine-tuning steps in when your task is unique to your business and needs consistently high performance. It’s like giving your LLM a custom-made outfit tailored precisely for the occasion! Let’s explore some best practices for fine-tuning LLMs and optimizing them like a pro:

- Identifying Specific Task Requirements: Before diving into fine-tuning, pinpoint what makes your task unique or challenging. Understanding the nuances of your task will guide you in effectively customizing your LLM for optimal performance.

- Fine-Tuning Enhancements: Imagine fine-tuning as adding the secret ingredients that make a dish extraordinary! Instead of training from scratch, you continue training the pre-trained model on new, task-specific data, refining what it already knows so it meets your performance goals.

- Domain-Specific Fine-Tuning: If your business operates in niche fields like science, law, or medicine, consider domain-specific fine-tuning to ensure accurate responses aligned with industry standards. This approach helps your LLM cater specifically to the intricacies of these specialized domains.

- Achieving Consistent Results: The beauty of fine-tuning lies in consistency. By tweaking and refining your model based on feedback and observed performance, you can get more reliable, dependable responses for precise tasks.

- Continuous Evaluation: Just like you taste-test while cooking, evaluating the effectiveness of your fine-tuned model is crucial! Keep testing its responses against real-world scenarios to make sure it keeps delivering top-notch results.
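One simple way to put continuous evaluation into practice is to rerun the same evaluation set after every fine-tuning round and flag any metric that drops compared to the previous model. The sketch below assumes every metric is higher-is-better and uses a single absolute tolerance of 0.01; the metric names and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    metric: str
    baseline: float
    candidate: float


def find_regressions(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.01,
) -> list[EvalResult]:
    """Return metrics where the candidate scores below baseline by more than `tolerance`.

    Assumes higher is better for every metric and applies the same absolute tolerance to all.
    """
    regressions = []
    for metric, base_score in baseline.items():
        if metric not in candidate:
            raise KeyError(f"candidate is missing metric {metric!r}")
        if candidate[metric] < base_score - tolerance:
            regressions.append(EvalResult(metric, base_score, candidate[metric]))
    return regressions


# Hypothetical scores from the previous model and the newly fine-tuned one.
baseline_scores = {"exact_match": 0.62, "token_f1": 0.74, "judge_score": 3.9}
candidate_scores = {"exact_match": 0.65, "token_f1": 0.71, "judge_score": 3.95}

for result in find_regressions(baseline_scores, candidate_scores):
    print(f"{result.metric}: {result.baseline:.2f} -> {result.candidate:.2f}")
```

Here the new model improves on exact match and judge score but slips on token F1, so only that metric is flagged for a closer look before shipping.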

Remember, when it comes to refining LLMs for specific tasks through fine-tuning, attention to detail makes all the difference! Think of it as crafting a bespoke suit – each adjustment ensures that the final product fits perfectly with unmatched style and functionality tailored just for you.

- Evaluating fine-tuned LLMs is crucial to ensure their performance in real-world scenarios.

- Fine-tuning LLMs involves adapting pre-trained models for specific tasks or domains.

- LLM evaluation involves comparing predicted answers against reference strings to measure accuracy.

- The LLM Eval module functions like a showdown between a smart AI judge and the model’s responses.

- Templates like LLM-as-a-Judge and TruthfulQA help assess traits like relevance, creativity, correctness, and truthfulness in fine-tuned LLMs.