When I opened my inbox, I was greeted by an exciting email from OpenAI: “Introducing new reasoning models: OpenAI o1-preview and o1-mini.” As a developer in the top usage tier (Tier 5), I was invited to join the exclusive beta testing for the o1 series.

Without hesitation, I jumped into experimenting. I knew a few key things:

OpenAI had just announced an entirely new model series and dropped the “GPT” branding, which suggested there might not be a GPT-5 after all. The community was already buzzing about the new “Strawberry” model, and now it had officially arrived.

The o1-preview is OpenAI’s most advanced reasoning model to date, while o1-mini focuses on fast reasoning and lightweight tasks.

What is the o1-preview Model?

After months of cryptic social media teasers under the codename “Project Strawberry,” OpenAI’s much-anticipated new model has finally been unveiled: the ‘o1’ series.

The naming may seem unconventional. Why not GPT-5 or GPT-4.1? According to OpenAI, the advancements in this new model family are so groundbreaking that they decided to start fresh, resetting the series back to “1.”

For complex reasoning tasks, the o1 models mark a substantial leap in AI capability. OpenAI says these models are designed to spend more time thinking before they respond, which lets them solve harder problems in science, coding, and math than previous models. They are not the fastest, but on multi-step problems their answers are noticeably more accurate.

The o1 Model Family

The o1 series is divided into three key variants:

- o1-preview: A preview of the most advanced model in the o1 family, representing a significant milestone in AI reasoning. This model pushes the boundaries of what AI can accomplish in terms of logical thinking and complex problem-solving.

- o1-mini: A smaller, faster, and more cost-effective variant, o1-mini excels in reasoning tasks, particularly in coding. It’s 80% cheaper than o1-preview, making it ideal for applications requiring quick reasoning without needing a vast amount of world knowledge.

- o1-regular: Though reportedly ready, the full o1 model has not been released to the public. Reports suggest it may be restricted to a niche user base through a specialized subscription costing as much as $2,000 per month.
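To make the “80% cheaper” figure concrete, here is a small cost calculator. It is a sketch that assumes the per-token list prices OpenAI published at launch ($15 / $60 per million input / output tokens for o1-preview, $3 / $12 for o1-mini); prices change, so check the current pricing page. `PRICES_PER_MILLION` and `request_cost` are hypothetical names, and the token counts in the example are made up.

```python
# Hypothetical cost calculator. Prices are assumed launch list prices in USD
# per 1M tokens; check OpenAI's current pricing before relying on them.
PRICES_PER_MILLION = {
    "o1-preview": {"input": 15.00, "output": 60.00},
    "o1-mini": {"input": 3.00, "output": 12.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request.

    output_tokens should include the hidden reasoning tokens, because o1
    models bill them as output tokens.
    """
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    # Illustrative request: 2,000 prompt tokens, 8,000 output tokens (reasoning + answer).
    preview = request_cost("o1-preview", 2_000, 8_000)
    mini = request_cost("o1-mini", 2_000, 8_000)
    print(f"o1-preview: ${preview:.3f}, o1-mini: ${mini:.3f}, savings: {1 - mini / preview:.0%}")
```

Because both input and output prices are one fifth of o1-preview's, the savings come out to 80% regardless of the token mix.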

Why Does “Reasoning” Matter in LLMs?

These new models emphasize the ability to reason through complex tasks, a shift from simply processing language to truly understanding and solving intricate problems. Trained with reinforcement learning, the o1 models aim to bring deeper intelligence and logic to large language models (LLMs). OpenAI’s focus on reasoning suggests that future AI systems will not just generate responses but will engage in thoughtful, logical problem-solving across diverse domains.

How Does Reasoning Work?

Similar to how humans take time to think before answering a difficult question, the o1 model employs a “chain of thought” process when tackling complex problems. It doesn’t just jump to conclusions but instead thoughtfully analyzes each step.

Through reinforcement learning, the model learns to recognize and correct its mistakes and to refine the strategies it uses. It breaks complex tasks down into simpler, more manageable steps and tries a different approach when the current one isn’t working.

The essence of reasoning lies in the model’s ability to evaluate multiple strategies before delivering a final response.
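OpenAI has not published how o1 searches over strategies internally, so the following is only a loose analogy: classic backtracking search, which commits to a step, checks whether the branch can still succeed, and undoes the step when it dead-ends. The function `find_steps` and the toy subset-sum task are hypothetical, chosen only to make the “try, fail, revise” pattern visible in a trace.

```python
from typing import List, Optional


def find_steps(target: int, options: List[int], trace: List[str]) -> Optional[List[int]]:
    """Pick distinct options (in order) that sum to target, logging each move.

    Hypothetical toy task: depth-first search that commits to a step and, when
    a branch dead-ends, undoes that step ("backtrack") and tries the next option.
    """

    def search(start: int, remaining: int, chosen: List[int]) -> Optional[List[int]]:
        if remaining == 0:
            return list(chosen)  # goal reached: return a copy of the path
        for i in range(start, len(options)):
            step = options[i]
            if step > remaining:
                continue  # prune: this step would overshoot the target
            chosen.append(step)
            trace.append(f"try {chosen}")
            result = search(i + 1, remaining - step, chosen)
            if result is not None:
                return result
            chosen.pop()  # revise: undo the step that led to a dead end
            trace.append(f"backtrack from {step}")
        return None

    return search(0, target, [])


if __name__ == "__main__":
    log: List[str] = []
    print(find_steps(10, [6, 5, 3, 2], log))
    print("\n".join(log))
```

Starting with 6 leads to a dead end (6 + 3 and 6 + 2 both fall short with nothing usable left), so the search backtracks and settles on 5 + 3 + 2.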

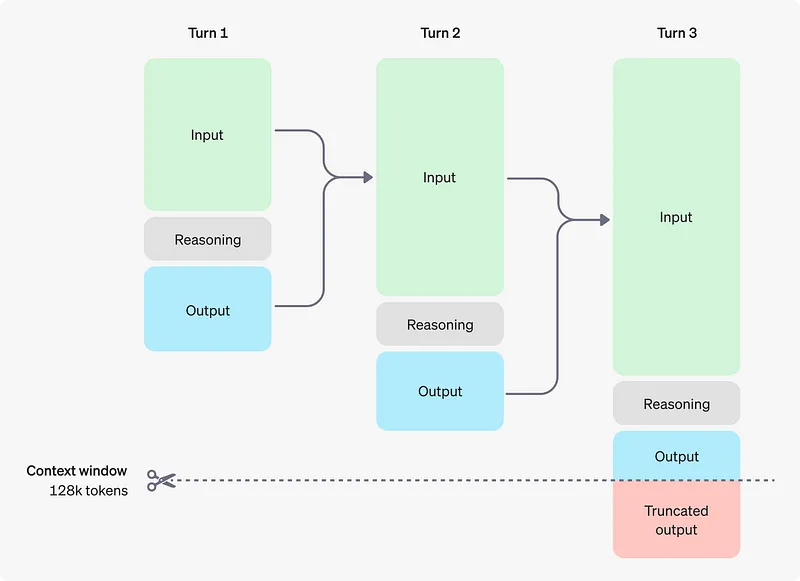

Here’s how it works:

- Generate reasoning tokens: The model generates reasoning steps internally.

- Produce visible completion tokens: It then outputs a final answer based on this reasoning process.

- Discard reasoning tokens: These reasoning steps are discarded from the context, keeping the focus on essential information.

While the reasoning tokens are not visible through the API, they still take up space in the model’s context window and are billed as output tokens. Discarding them ensures that the model’s context remains streamlined.
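The bookkeeping described above can be sketched with a small simulation. It assumes a multi-turn Chat Completions-style conversation in which the client resends the visible history each turn; the `Turn` class, the `simulate` function, the token counts, and the 128,000-token window are illustrative assumptions rather than measured values.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Turn:
    """Token counts for one request/response turn (illustrative numbers)."""
    user_tokens: int       # new user message sent this turn
    reasoning_tokens: int  # hidden; billed as output, then discarded
    answer_tokens: int     # visible completion; kept in the conversation history


def simulate(turns: List[Turn], context_window: int) -> Tuple[int, int]:
    """Return (history tokens after the last turn, total billed output tokens).

    Raises ValueError if a turn's prompt plus its reasoning and answer tokens
    would not fit in the context window.
    """
    history = 0
    billed_output = 0
    for i, turn in enumerate(turns):
        prompt = history + turn.user_tokens
        # While generating, reasoning and answer tokens share the window with the prompt.
        peak = prompt + turn.reasoning_tokens + turn.answer_tokens
        if peak > context_window:
            raise ValueError(f"turn {i} needs {peak} tokens; window is {context_window}")
        billed_output += turn.reasoning_tokens + turn.answer_tokens
        # Only the visible answer is carried into the next turn's input.
        history = prompt + turn.answer_tokens
    return history, billed_output


if __name__ == "__main__":
    turns = [Turn(500, 4_000, 700), Turn(300, 6_000, 900)]
    print(simulate(turns, context_window=128_000))
```

With these numbers the history after two turns is 2,400 tokens, whereas keeping the 10,000 reasoning tokens would have pushed it to 12,400; the billed output is 11,600 tokens either way.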

Although this process is slower, it reflects the growing paradigm of inference-time scaling noted by NVIDIA senior researcher Jim Fan: spending more compute while the model answers, rather than only while it is trained. With o1, this approach is now deployed in a production system.

Fan highlights several key insights:

- You don’t need massive models for reasoning: Many parameters in large models are used for memorizing facts (e.g., for tasks like trivia QA). However, reasoning can be separated from knowledge, allowing for a smaller, specialized “reasoning core” that knows how to use external tools like a browser or code verifier. This can reduce the need for extensive pre-training compute.

- Inference-time scaling shifts compute focus: Instead of dedicating immense resources only to pre- or post-training, a significant portion of compute is now allocated to serving inference. Large language models act as text-based simulators, exploring various strategies and scenarios. Much like AlphaGo’s Monte Carlo Tree Search (MCTS), rolling out many possible strategies eventually leads the model to converge on good solutions.
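To see why spending more compute at inference time can pay off, here is a toy simulation of one simple form of it: sampling several independent answers and taking a majority vote (often called self-consistency). This is not MCTS and not how o1 works internally, which OpenAI has not disclosed; `noisy_solver` is a hypothetical stand-in for one sampled reasoning chain that is right 60% of the time.

```python
import random
from collections import Counter


def noisy_solver(rng: random.Random, correct: int, p_correct: float) -> int:
    """Hypothetical stand-in for one sampled reasoning chain.

    Returns the correct answer with probability p_correct; otherwise returns
    one of nine wrong answers chosen uniformly at random.
    """
    if rng.random() < p_correct:
        return correct
    return correct + rng.randint(1, 9)


def majority_vote(rng: random.Random, correct: int, p_correct: float, n_samples: int) -> int:
    """Sample n_samples answers and return the most common one."""
    answers = [noisy_solver(rng, correct, p_correct) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 200, p_correct: float = 0.6, seed: int = 0) -> float:
    """Fraction of trials in which majority voting recovers the correct answer."""
    rng = random.Random(seed)
    correct = 42
    hits = sum(majority_vote(rng, correct, p_correct, n_samples) == correct for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    # More samples per question means more compute spent at inference time.
    for n in (1, 5, 25):
        print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

Accuracy climbs as the number of samples grows even though no individual sample gets any smarter; the extra cost is paid entirely at inference time.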

How Does o1 Compare to GPT-4o?

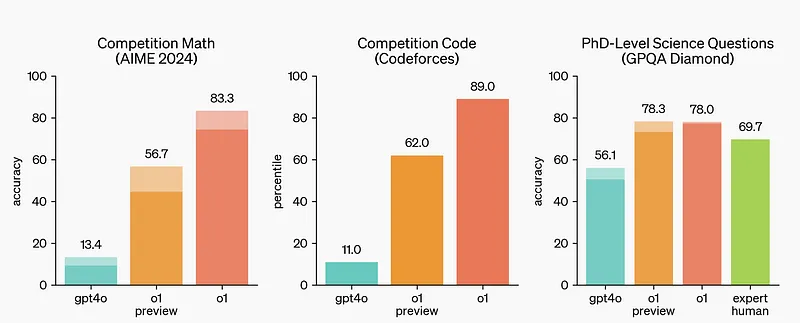

To assess how the o1 models measure up against GPT-4o, OpenAI conducted a wide range of tests using human exams and machine learning benchmarks.

The results, as illustrated in the graph, show that o1 significantly outperforms GPT-4o on complex reasoning tasks, particularly in areas like mathematics, coding, and science.

One standout benchmark was GPQA-diamond, a set of difficult questions designed to test expertise in chemistry, physics, and biology. OpenAI recruited experts with PhDs to answer the same GPQA-diamond questions, allowing a direct comparison between human performance and the o1 model.

Amazingly, o1 surpassed these human experts, becoming the first model to achieve such a feat on this benchmark. While this doesn’t mean that o1 outperforms PhDs in every aspect, it does highlight the model’s ability to excel in specific problem-solving areas where expert-level knowledge is required.

For those interested, OpenAI has published a detailed technical report on the o1 models, which you can explore further.

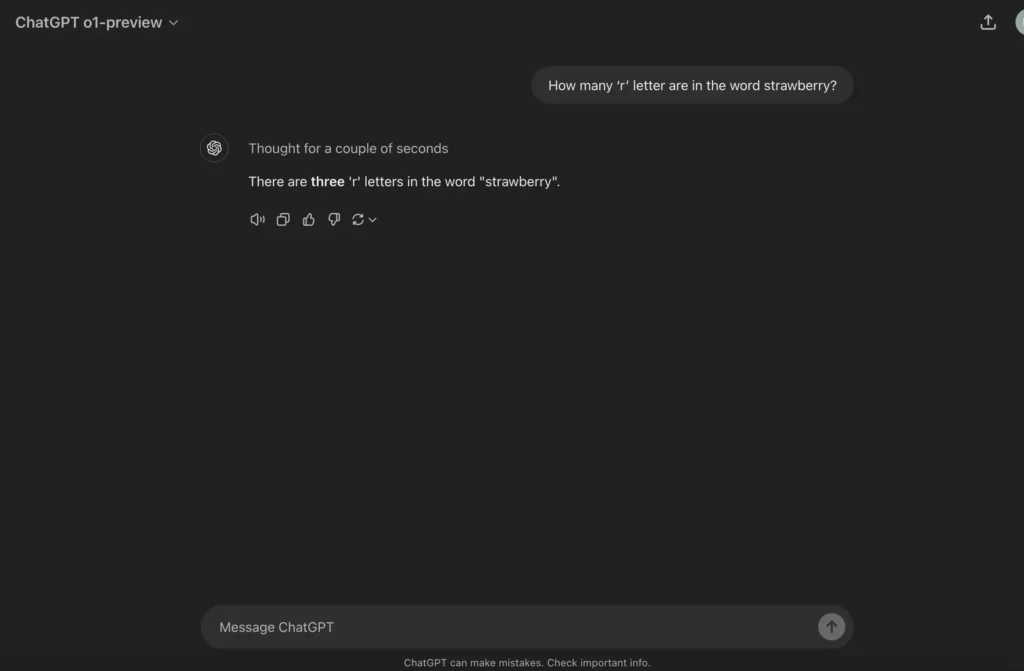

To further demonstrate o1’s capabilities compared to GPT-4o, consider a simple but illustrative test: counting the number of ‘r’s in the word “strawberry.”

Prompt 1: The strawberry

Prompt: How many ‘r’ letter are in the word strawberry?

There are 3 ‘r’s in “s”t”r”a”w”b”e”r”r”y”.

> If you insert characters to break the tokens down, it finds the correct result: how many r’s are in “s”t”r”a”w”b”e”r”r”y”? The issue is that humans don’t talk like this. I don’t ask someone how many r’s there are in strawberry by spelling out strawberry; I just say the word.
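The reason spelling the word out helps is tokenization: the model reads subword tokens, not individual letters. The sketch below uses a hypothetical toy vocabulary with greedy longest-match splitting; real tokenizers such as OpenAI’s use learned byte-pair-encoding vocabularies, and the exact split of “strawberry” depends on the tokenizer. With the plain word, no token is the letter “r”; once the letters are separated by quote marks, each “r” becomes its own token.

```python
from typing import List, Set

# Hypothetical toy vocabulary; real tokenizers learn tens of thousands of pieces.
TOY_VOCAB = {"str", "aw", "berry", "s", "t", "r", "a", "w", "b", "e", "y", '"'}


def greedy_tokenize(text: str, vocab: Set[str]) -> List[str]:
    """Split text by repeatedly taking the longest vocabulary piece that matches."""
    max_len = max(len(piece) for piece in vocab)
    tokens: List[str] = []
    i = 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(piece)
                i += size
                break
        else:
            # No vocabulary match: fall back to a single character.
            tokens.append(text[i])
            i += 1
    return tokens


if __name__ == "__main__":
    plain = greedy_tokenize("strawberry", TOY_VOCAB)
    spelled = greedy_tokenize('"'.join("strawberry"), TOY_VOCAB)
    print(plain, "-> 'r' tokens:", plain.count("r"))
    print(spelled, "-> 'r' tokens:", spelled.count("r"))
```

The letter count itself is trivial at the character level (`"strawberry".count("r")` is 3); the difficulty comes from the model never seeing those characters as separate units.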

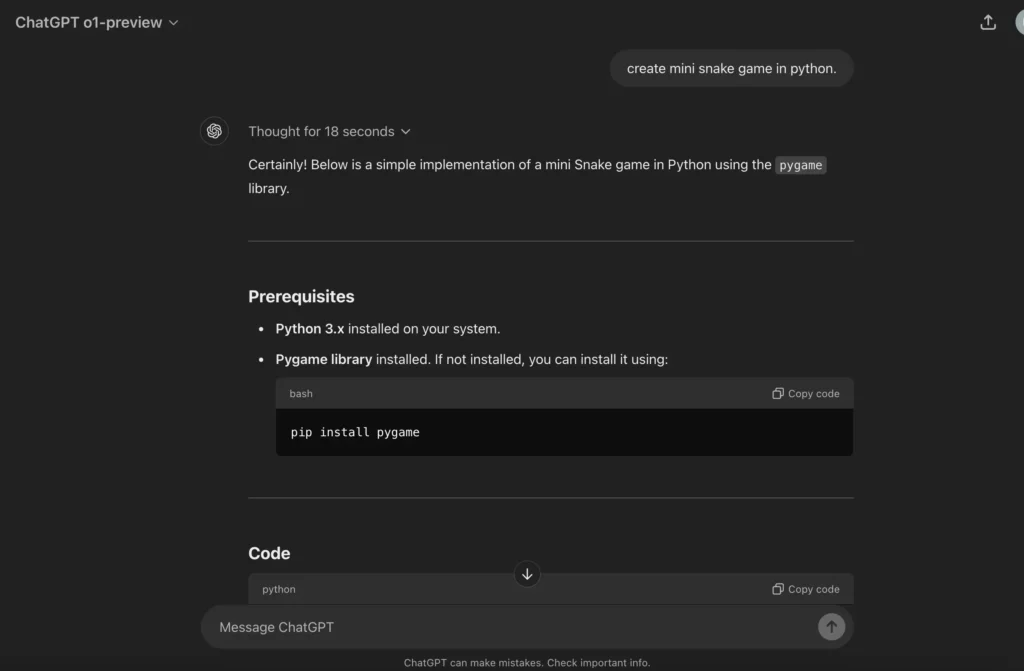

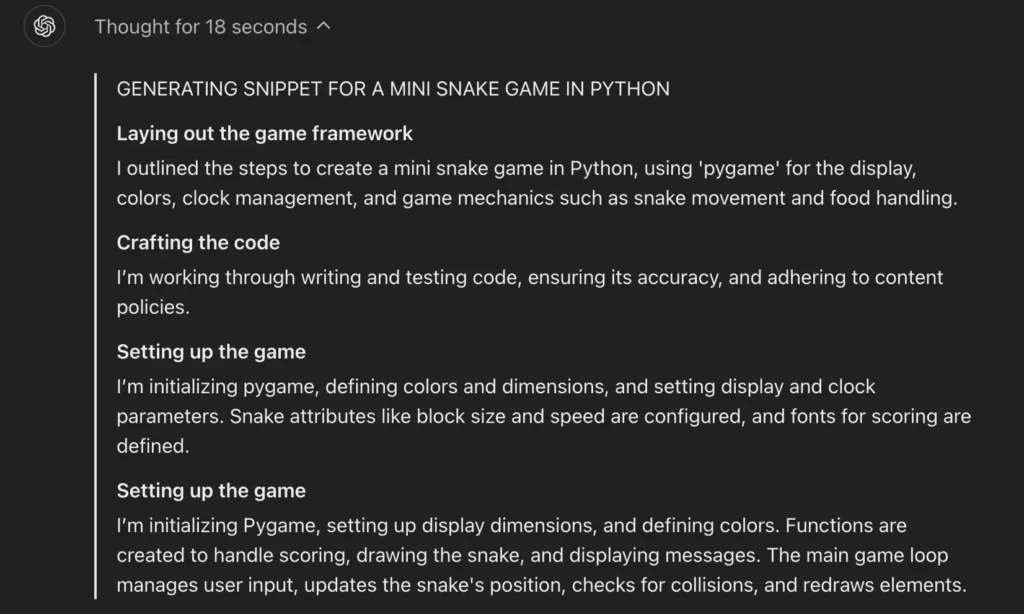

Prompt 2: Snake game in Python

Let’s try another one. This time, we’ll ask both models to create a mini Snake game in Python.

Prompt: create mini snake game in python.

This section illustrates the model’s “chain of thought” or reasoning process, where it carefully considers and selects the most effective steps to accomplish a given task. This thought process can move both forward and backward, allowing the model to revisit and revise previously chosen steps to ensure the best possible outcome.

Here is the complete output from o1-preview:

o1-preview answer

Certainly! Below is a simple implementation of a mini Snake game in Python using the `pygame` library.

---

### **Prerequisites**

- **Python 3.x** installed on your system.

- **Pygame library** installed. If not installed, you can install it using:

```bash
pip install pygame
```

---

### **Code**

```python
import pygame
import time
import random

# Initialize Pygame
pygame.init()

# Define colors (R, G, B)
white = (255, 255, 255)
yellow = (255, 255, 102)
black = (0, 0, 0)
red = (213, 50, 80)
green = (0, 255, 0)
blue = (50, 153, 213)

# Set display dimensions
dis_width = 600
dis_height = 400

# Create the display window
dis = pygame.display.set_mode((dis_width, dis_height))
pygame.display.set_caption('Snake Game by ChatGPT')

# Set up the game clock
clock = pygame.time.Clock()

# Set snake size and speed
snake_block = 10
snake_speed = 15

# Set up fonts for text
font_style = pygame.font.SysFont("bahnschrift", 25)
score_font = pygame.font.SysFont("comicsansms", 35)


def display_score(score):
    """Display the current score."""
    value = score_font.render("Your Score: " + str(score), True, yellow)
    dis.blit(value, [0, 0])


def draw_snake(snake_block, snake_list):
    """Draw the snake on the screen."""
    for x in snake_list:
        pygame.draw.rect(dis, black, [x[0], x[1], snake_block, snake_block])


def message(msg, color):
    """Display a message on the screen."""
    mesg = font_style.render(msg, True, color)
    dis.blit(mesg, [dis_width / 6, dis_height / 3])


def game_loop():
    """Main function to run the game loop."""
    game_over = False
    game_close = False

    # Initial position of the snake
    x1 = dis_width / 2
    y1 = dis_height / 2

    # Initial movement
    x1_change = 0
    y1_change = 0

    # Snake body
    snake_List = []
    Length_of_snake = 1

    # Initial position of the food
    foodx = round(random.randrange(0, dis_width - snake_block) / 10.0) * 10.0
    foody = round(random.randrange(0, dis_height - snake_block) / 10.0) * 10.0

    while not game_over:

        while game_close:
            dis.fill(blue)
            message("You Lost! Press C-Play Again or Q-Quit", red)
            display_score(Length_of_snake - 1)
            pygame.display.update()

            # Event handling for game over options
            for event in pygame.event.get():
                if event.type == pygame.KEYDOWN:
                    if event.key == pygame.K_q:
                        game_over = True
                        game_close = False
                    if event.key == pygame.K_c:
                        game_loop()
                if event.type == pygame.QUIT:
                    game_over = True
                    game_close = False

        # Event handling for snake movement
        for event in pygame.event.get():
            if event.type == pygame.QUIT:
                game_over = True
            if event.type == pygame.KEYDOWN:
                if event.key == pygame.K_LEFT:
                    x1_change = -snake_block
                    y1_change = 0
                elif event.key == pygame.K_RIGHT:
                    x1_change = snake_block
                    y1_change = 0
                elif event.key == pygame.K_UP:
                    y1_change = -snake_block
                    x1_change = 0
                elif event.key == pygame.K_DOWN:
                    y1_change = snake_block
                    x1_change = 0

        # Check boundaries
        if x1 >= dis_width or x1 < 0 or y1 >= dis_height or y1 < 0:
            game_close = True

        # Update position
        x1 += x1_change
        y1 += y1_change
        dis.fill(blue)
        pygame.draw.rect(dis, green, [foodx, foody, snake_block, snake_block])

        # Snake growth mechanism
        snake_Head = []
        snake_Head.append(x1)
        snake_Head.append(y1)
        snake_List.append(snake_Head)
        if len(snake_List) > Length_of_snake:
            del snake_List[0]

        # Check collision with self
        for x in snake_List[:-1]:
            if x == snake_Head:
                game_close = True

        draw_snake(snake_block, snake_List)
        display_score(Length_of_snake - 1)
        pygame.display.update()

        # Check if food is eaten
        if x1 == foodx and y1 == foody:
            foodx = round(random.randrange(0, dis_width - snake_block) / 10.0) * 10.0
            foody = round(random.randrange(0, dis_height - snake_block) / 10.0) * 10.0
            Length_of_snake += 1

        clock.tick(snake_speed)

    pygame.quit()
    quit()


game_loop()
```

---

### **How to Run the Game**

1. **Save the Code:**

- Copy the code into a file and save it as `snake_game.py`.

2. **Run the Game:**

- Open a terminal or command prompt.

- Navigate to the directory where `snake_game.py` is saved.

- Run the game using the command:

```bash
python snake_game.py
```

or

```bash
python3 snake_game.py
```

3. **Control the Snake:**

- Use the **arrow keys** on your keyboard to control the direction of the snake.

4. **Game Over Options:**

- When you lose, you can press:

- **'C'** to play again.

- **'Q'** to quit the game.

---

### **Explanation**

- **Initialization:**

- **Import Modules:** We import `pygame`, `time`, and `random`.

- **Pygame Initialization:** Initialize all imported pygame modules.

- **Colors and Display:** Define color variables and set up the game display window.

- **Game Clock:** Create a clock object to manage how fast the screen updates.

- **Snake and Food Settings:** Define the size of the snake blocks and the speed.

- **Functions:**

- **`display_score(score)`:** Displays the current score at the top-left corner.

- **`draw_snake(snake_block, snake_list)`:** Draws each segment of the snake.

- **`message(msg, color)`:** Displays messages like game over prompts.

- **Main Game Loop (`game_loop`):**

- **Game Over Handling:** Provides options to quit or restart when the game is over.

- **Event Handling:** Captures user inputs for controlling the snake and exiting the game.

- **Movement Logic:** Updates the position of the snake based on user input.

- **Boundary Checking:** Ends the game if the snake hits the boundaries.

- **Food Consumption:** Increases the length of the snake when it eats food and spawns new food.

- **Self-Collision Detection:** Ends the game if the snake collides with itself.

- **Screen Update:** Refreshes the game display with new positions and updates.

---

### **Tips for Enhancement**

- **Add Sound Effects:** Incorporate sounds for food consumption and game over events.

- **Improved Graphics:** Use images for the snake and food instead of plain rectangles.

- **Levels and Difficulty:** Increase the snake speed as the score increases to make the game more challenging.

- **Obstacles:** Add obstacles that the snake must avoid.

- **Scoreboard:** Implement a high-score feature that saves the highest score achieved.

---

Enjoy your mini Snake game!

Not bad at all. Let’s now compare the output of the same prompt from GPT-4o:

GPT-4o answer

Here's a simple implementation of a "Snake" game using Python and the `pygame` library. The game consists of a snake that moves around the screen, collecting food. Every time the snake eats food, it grows longer. The game ends when the snake collides with itself or the screen edges.

### Install pygame

If you don't have the `pygame` library installed, you can install it using pip:

```bash
pip install pygame
```

### Mini Snake Game in Python

```python
import pygame
import time
import random

# Initialize Pygame
pygame.init()

# Define colors
WHITE = (255, 255, 255)
BLACK = (0, 0, 0)
RED = (213, 50, 80)
GREEN = (0, 255, 0)
BLUE = (50, 153, 213)

# Display settings
WIDTH = 600
HEIGHT = 400
DISPLAY = pygame.display.set_mode((WIDTH, HEIGHT))
pygame.display.set_caption('Snake Game')

# Clock and snake settings
clock = pygame.time.Clock()
SNAKE_BLOCK = 10
SNAKE_SPEED = 15

# Fonts
font_style = pygame.font.SysFont("bahnschrift", 25)
score_font = pygame.font.SysFont("comicsansms", 35)


# Display score function
def show_score(score):
    value = score_font.render("Your Score: " + str(score), True, BLACK)
    DISPLAY.blit(value, [0, 0])


# Draw the snake
def draw_snake(snake_block, snake_list):
    for x in snake_list:
        pygame.draw.rect(DISPLAY, GREEN, [x[0], x[1], snake_block, snake_block])


# Display a message in the middle of the screen
def message(msg, color):
    mesg = font_style.render(msg, True, color)
    DISPLAY.blit(mesg, [WIDTH / 6, HEIGHT / 3])


# Main game loop
def game_loop():
    game_over = False
    game_close = False

    # Starting position of the snake
    x1 = WIDTH / 2
    y1 = HEIGHT / 2

    # Change in position
    x1_change = 0
    y1_change = 0

    # Snake body
    snake_list = []
    snake_length = 1

    # Random food position
    foodx = round(random.randrange(0, WIDTH - SNAKE_BLOCK) / 10.0) * 10.0
    foody = round(random.randrange(0, HEIGHT - SNAKE_BLOCK) / 10.0) * 10.0

    while not game_over:

        while game_close:
            DISPLAY.fill(BLUE)
            message("You Lost! Press Q-Quit or C-Play Again", RED)
            show_score(snake_length - 1)
            pygame.display.update()

            # Game over or restart
            for event in pygame.event.get():
                if event.type == pygame.KEYDOWN:
                    if event.key == pygame.K_q:
                        game_over = True
                        game_close = False
                    if event.key == pygame.K_c:
                        game_loop()

        # Detect keystrokes
        for event in pygame.event.get():
            if event.type == pygame.QUIT:
                game_over = True
            if event.type == pygame.KEYDOWN:
                if event.key == pygame.K_LEFT:
                    x1_change = -SNAKE_BLOCK
                    y1_change = 0
                elif event.key == pygame.K_RIGHT:
                    x1_change = SNAKE_BLOCK
                    y1_change = 0
                elif event.key == pygame.K_UP:
                    y1_change = -SNAKE_BLOCK
                    x1_change = 0
                elif event.key == pygame.K_DOWN:
                    y1_change = SNAKE_BLOCK
                    x1_change = 0

        # Move snake
        if x1 >= WIDTH or x1 < 0 or y1 >= HEIGHT or y1 < 0:
            game_close = True
        x1 += x1_change
        y1 += y1_change
        DISPLAY.fill(WHITE)

        # Draw the food
        pygame.draw.rect(DISPLAY, RED, [foodx, foody, SNAKE_BLOCK, SNAKE_BLOCK])

        # Snake movement
        snake_head = []
        snake_head.append(x1)
        snake_head.append(y1)
        snake_list.append(snake_head)
        if len(snake_list) > snake_length:
            del snake_list[0]

        # Check for collisions with itself
        for x in snake_list[:-1]:
            if x == snake_head:
                game_close = True

        # Draw the snake
        draw_snake(SNAKE_BLOCK, snake_list)
        show_score(snake_length - 1)
        pygame.display.update()

        # Snake eats food
        if x1 == foodx and y1 == foody:
            foodx = round(random.randrange(0, WIDTH - SNAKE_BLOCK) / 10.0) * 10.0
            foody = round(random.randrange(0, HEIGHT - SNAKE_BLOCK) / 10.0) * 10.0
            snake_length += 1

        clock.tick(SNAKE_SPEED)

    pygame.quit()
    quit()


# Start the game
game_loop()
```

### How the Game Works:

- The snake moves continuously in the direction controlled by the arrow keys.

- The game generates food at random locations on the screen.

- The snake grows longer each time it eats food, and the score increases.

- The game ends if the snake collides with the wall or itself.

### Controls:

- Use the arrow keys to control the snake's movement.

- Press `C` to play again or `Q` to quit after a game-over.

Make sure you have the `pygame` library installed and run the script to play the game!

Observations

- Both programs use `pygame` to implement a classic Snake game, with similar mechanics and structure: snake movement, food consumption, and self-collision detection.

- o1-preview uses a wider color palette (e.g., yellow for the score and green for the food), whereas GPT-4o keeps the color scheme simpler, with primarily green, red, and blue.

- o1-preview initializes the game screen with a blue background during gameplay, while GPT-4o uses a white background for most of the game.

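- Both programs have nearly identical functions for displaying the score and rendering text messages, and their game-over prompts differ only in word order (“Press C-Play Again or Q-Quit” vs. “Press Q-Quit or C-Play Again”). o1-preview’s version also handles closing the window from the game-over screen, which GPT-4o’s does not.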

- The variable naming convention differs slightly: o1-preview uses lowercase for constants like `dis_width`, while GPT-4o uses uppercase like `WIDTH`.

- o1-preview includes more detailed comments and explanations for various functions, whereas GPT-4o offers a more concise and streamlined version of the same game logic.

Overall, both programs achieve the same functionality. o1-preview’s answer is more detailed, with docstrings, a step-by-step explanation, and enhancement tips, while GPT-4o’s is more streamlined and minimalist.

Once again, o1 delivered a working solution, although it took a bit longer to process the task than GPT-4o, reflecting its more thorough reasoning approach.

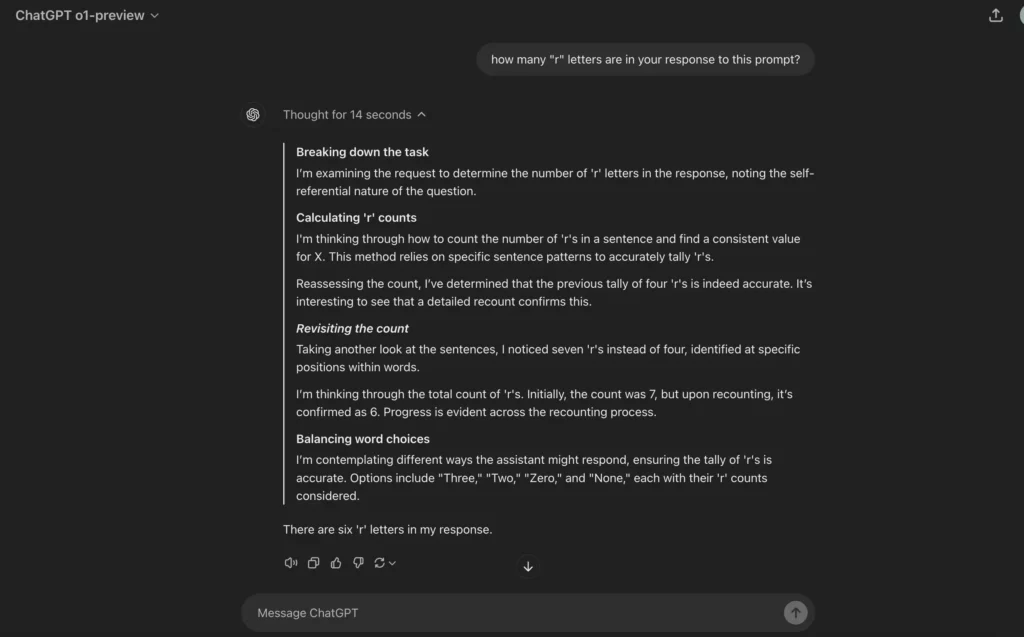

o1 Model is Not Without Flaws

Even OpenAI CEO Sam Altman has acknowledged that o1, while impressive, is still imperfect and has its limitations. While it may seem groundbreaking at first, extended use reveals some areas where the model can still fall short.

> here is o1, a series of our most capable and aligned models yet: https://t.co/yzZGNN8HvD
>
> o1 is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.
>
> Sam Altman (@sama), September 12, 2024

For instance, o1 can occasionally make errors on straightforward tasks, like counting the number of ‘r’s in its response.

It’s important to note that while o1 brings significant advancements in reasoning, it is not designed to replace GPT-4o in every scenario. For applications requiring image inputs, function calling, or consistently fast response times, GPT-4o and GPT-4o mini remain the more suitable options.

For developers, here are the key API features and parameters that are not yet available for o1 models during the beta:

- Modalities: Supports text only; images are not yet supported.

- Message types: Only user and assistant messages are allowed; system messages are not supported.

- Streaming: Not available.

- Tools: Function calling, tool use, and custom response formatting parameters are not supported.

- Logprobs: Not available.

- Other limitations: Parameters like `temperature`, `top_p`, and `n` are fixed at 1, while `presence_penalty` and `frequency_penalty` are fixed at 0.

- Assistants and Batch: o1 models are not yet supported in the Assistants API or the Batch API.
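If existing code targets GPT-4o, the limitations above mean some requests must be adjusted before they are sent to an o1 model. Below is a minimal sketch that works on a plain request dictionary, so it does not depend on any SDK. `adapt_request_for_o1` is a hypothetical helper; folding system messages into user messages is one common workaround rather than an official recommendation, and the renaming of `max_tokens` to `max_completion_tokens` reflects the parameter OpenAI introduced for o1 models.

```python
from copy import deepcopy
from typing import Any, Dict

# Request parameters the o1 beta does not accept (per the list above).
UNSUPPORTED_PARAMS = {
    "stream", "tools", "tool_choice", "parallel_tool_calls",
    "functions", "function_call", "response_format",
    "logprobs", "top_logprobs",
}
# Parameters that are fixed for o1 models; dropping them avoids sending
# values the API would reject.
FIXED_PARAMS = {"temperature", "top_p", "n", "presence_penalty", "frequency_penalty"}


def adapt_request_for_o1(request: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a Chat Completions payload trimmed to o1 beta limits.

    Hypothetical helper: the input dictionary is left unchanged.
    """
    adapted = deepcopy(request)
    for key in UNSUPPORTED_PARAMS | FIXED_PARAMS:
        adapted.pop(key, None)
    # o1 models take max_completion_tokens, which also caps hidden reasoning tokens.
    if "max_tokens" in adapted:
        adapted["max_completion_tokens"] = adapted.pop("max_tokens")
    # System messages are not supported, so resend their content as user messages.
    adapted["messages"] = [
        {**msg, "role": "user"} if msg.get("role") == "system" else msg
        for msg in adapted.get("messages", [])
    ]
    return adapted
```

For example, a request with a system prompt, `temperature=0.2`, `stream=True`, and `max_tokens=500` comes out with the system prompt sent as a user message, the sampling and streaming options removed, and `max_completion_tokens=500`.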

Facts & Key Takeaways

Technological Potential and Future Directions

- The reinforcement learning method applied in o1 allows the AI to perform more complex reasoning through a step-by-step thought process, akin to human problem-solving.

- o1’s ability to recursively generate reasoning tokens marks a step toward more autonomous AI decision-making without human direction at every step.

- o1’s reliance on reinforcement learning resembles Google DeepMind’s approach with AlphaProof and AlphaCode, which have already shown strong results in competition math and programming.

- o1 exemplifies the next phase in AI: advanced models that don’t just understand language but can think through problems systematically like a human, although imperfectly.

- The ability to recursively prompt itself gives o1 a significant edge over previous models, enabling more refined reasoning without extensive human intervention.

- Compared to its predecessors, o1 more closely mimics human cognitive processes, offering a new perspective on AI’s capacity to simulate human thought.

- The significant improvement in coding benchmarks hints at the model’s growing ability to handle real-world programming challenges autonomously, albeit imperfectly.

- The Chain of Thought mechanism enables the model to handle coding challenges more effectively by assessing problem constraints and logic before providing solutions.

- We acknowledge that while the model is more capable than previous versions, it still hasn’t crossed the threshold into true intelligence or general AI.

Limitations and Criticisms of AI Models

- Despite its innovations, o1 isn’t an artificial general intelligence (AGI) or artificial superintelligence (ASI), leaving human intelligence unmatched for now.

- Even though the model outperforms earlier models on many tasks, its raw reasoning chain is hidden from the user, making it difficult to track how the AI arrives at its conclusions.

- The improvements in o1 raise concerns that its potential may be overstated by venture capital-backed companies seeking investment rather than focusing on tangible results.

- Despite these advances, o1 still hallucinates, confidently providing incorrect or nonsensical outputs.

- The model can still stumble on simple tasks such as counting letters in words like “strawberry,” a reminder that advanced reasoning doesn’t always equate to flawless performance.

- Although not fundamentally revolutionary, o1 marks a substantial improvement in its ability to self-prompt and reason through complex problems.

- o1’s rapid advancement fuels growing concerns about the long-term societal implications of AI replacing human workers, especially in tech and engineering fields.

- While OpenAI aims to raise more funding, we speculate that internal benchmarks may be exaggerated to attract investors rather than reflecting real-world progress.

- Despite the hype, o1 remains a tool rather than a life-changing breakthrough, though it represents a shift toward more sophisticated, self-correcting AI.

Economic and Strategic Factors

- OpenAI continues to keep many details about o1 closed, contradicting its original mission of openness and transparency.

- OpenAI has reportedly considered a premium plan of up to $2,000 per month for access to the full capabilities of the regular o1 model, illustrating the escalating costs of cutting-edge AI.

- The timing of o1’s release reflects OpenAI’s strategic maneuver to regain dominance over competitors like Anthropic’s Claude, which has gained popularity.

- The trade-off between performance and resource consumption raises ethical concerns about who can afford to use these advanced models and the environmental cost of their energy demands.

- OpenAI’s secrecy around o1’s full capabilities contrasts sharply with its initial mission of openness, raising concerns about the future direction of the company’s ethical standards.

- OpenAI’s continued pursuit of stronger AI through o1 positions the company to dominate the market while deepening reliance on expensive and complex AI models.
