Alright, so I know that I’ve been saying something is all you need a lot, but this time it is honestly different. QLoRA is truly all you need. Personally, I have long been annoyed at how cold, boring, robotic, and just sort of dead the current state of AI conversations is. What I really want is a little personality, a little spice, and I want an AI to sometimes make me laugh. The problem is, where do you get enough data that a model is both useful and fun like this at the same time?

In this article, we will discuss the concept of QLoRA, a fast and lightweight model fine-tuning technique. QLoRA stands for Quantized Low-Rank Adaptation, and it has been shown to be an effective method for fine-tuning pre-trained models.

QLoRA: Fast and Lightweight Model Fine-Tuning

The traditional methods of training such a model from scratch take months and millions of dollars, or arguably hundreds of millions of dollars of infrastructure. You could also do a traditional fine-tune, but even then, you’re looking at weeks and tens of thousands of dollars. Enter LoRA (Low-Rank Adaptation, often just called low-rank adapters) from Microsoft Research, the technique that QLoRA builds on. The concept here is actually based on research from Facebook at the time, now Meta, of course. It showed that as you pre-train models, what you’re really doing is lowering the model’s intrinsic dimensionality. The idea here is that after a model has been pre-trained, you can update a condensed version of the weight matrix in fine-tuning.

That’s where LoRA comes in. The research from Microsoft is based on that research from Meta. The LoRA paper showed that you could reduce trainable parameters by up to 10,000 times and still be effective for fine-tuning. The proposition from LoRA is that we can represent that exact same delta weight matrix as we train in far fewer dimensions, assuming a pre-trained model. You can’t simply do this right out of the gate; you need pre-training to lower those dimensions.

Imagine you have a weight matrix that is 10,000 by 10,000. That’s 100 million values contained there. If you wanted to calculate the delta weights, that would be calculating 100 million delta weight values. LoRA asks: what if, rather than having that single delta weight matrix, we had two much smaller ones?

Matrices A and B are each far smaller than the full matrix, which significantly reduces the number of values to be trained. When these two matrices are multiplied together, you get back a delta weight matrix of the full size, following the principles outlined in the original LoRA paper.
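To make the arithmetic concrete, here is a minimal sketch of the two-matrix idea using the 10,000 by 10,000 example. The rank `r = 8` is an assumption picked for illustration, not a value from the article, and the shape check runs on a small matrix so it finishes quickly.

```python
import numpy as np

d = 10_000   # width and height of the full weight matrix from the example
r = 8        # adapter rank (assumed for illustration)

full_delta_values = d * d        # values in one full delta weight matrix
lora_values = d * r + r * d      # values in B (d x r) plus A (r x d)
reduction = full_delta_values / lora_values

print(full_delta_values, lora_values, reduction)
# prints: 100000000 160000 625.0

# Small-scale check that B @ A has the same shape as the full delta matrix.
rng = np.random.default_rng(0)
d_small = 64
B = np.zeros((d_small, r))            # the LoRA paper starts B at zero
A = rng.normal(size=(r, d_small))     # and A at small random values
delta_W = B @ A                       # all zeros before any training step
```

With these numbers, the two small matrices hold 625 times fewer values than the full delta matrix. Because B starts at zero, the product starts at zero too, so training begins from the unmodified pre-trained weights.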

Benefits of QLoRA

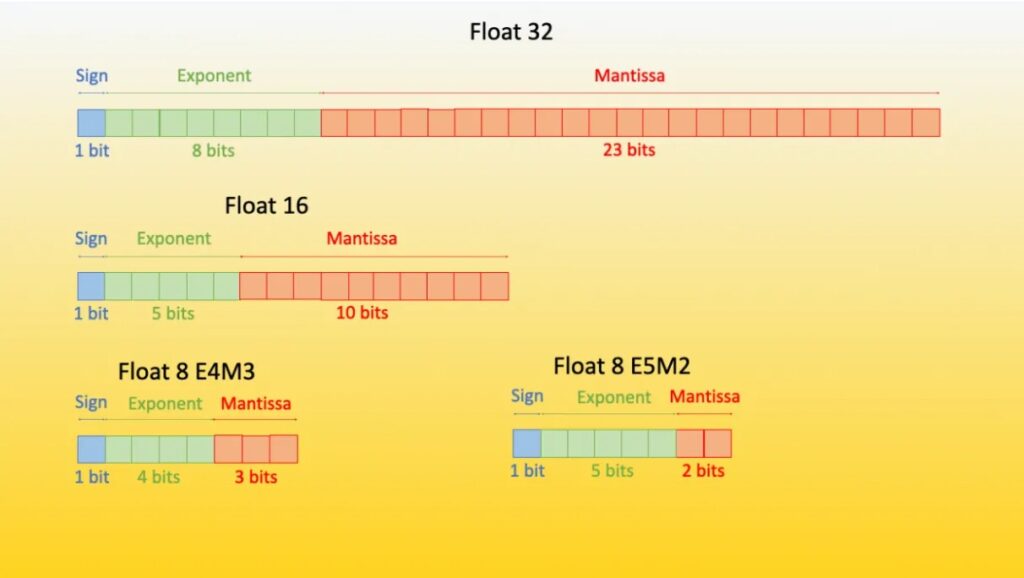

LoRA offers several advantages, including faster training due to reduced calculations and lower memory requirements. Researchers at the University of Washington then extended LoRA with quantization, which is where the Q in QLoRA comes from: the frozen base model is stored at reduced precision, which cuts memory usage even more.
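As a rough illustration of what quantization buys you in memory, here is a simplified sketch of blockwise quantization to 4-bit values. This is an assumption-heavy toy: it uses evenly spaced integer levels and a block size of 64, whereas the QLoRA paper uses its own 4-bit data type, so treat it as the general idea rather than the actual method.

```python
import numpy as np

def quantize_blockwise(weights, block_size=64, bits=4):
    """Quantize a 1-D float array to signed integers, one scale per block.

    The array length must be a multiple of block_size.
    """
    levels = 2 ** (bits - 1) - 1                    # 7 for 4-bit signed values
    blocks = weights.reshape(-1, block_size)
    scales = np.abs(blocks).max(axis=1, keepdims=True)
    scales[scales == 0] = 1.0                       # avoid dividing by zero
    codes = np.round(blocks / scales * levels).astype(np.int8)
    return codes, scales

def dequantize_blockwise(codes, scales, bits=4):
    """Map integer codes back to approximate float values."""
    levels = 2 ** (bits - 1) - 1
    return codes.astype(np.float32) / levels * scales

rng = np.random.default_rng(0)
w = rng.normal(scale=0.02, size=4096).astype(np.float32)

codes, scales = quantize_blockwise(w)
w_hat = dequantize_blockwise(codes, scales).reshape(-1)

max_error = np.abs(w - w_hat).max()
fp16_bytes = w.size * 2                             # 16 bits per weight
int4_bytes = w.size * 4 // 8 + scales.size * 4      # 4 bits per weight + float32 scales
print(fp16_bytes, int4_bytes)
```

For these 4,096 weights, the 16-bit version takes 8,192 bytes and the 4-bit version takes 2,304 bytes including the per-block scales, at the cost of a small rounding error in each weight.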

One of the key benefits of QLoRA is the ability to fine-tune models with minimal samples, sometimes as few as a thousand. This flexibility allows for fine-tuning based on specific structures or objectives, using any desired data.

The researchers at the University of Washington have provided a GitHub repository with valuable information and code for training QLoRA models. This accessibility and support make QLoRA a versatile tool for various applications, such as chatbots, code predictors, and generative text models.

My own experiments with QLoRA have shown its potential for creating customized models, such as adapting the Llama 2 7B chat model using data from the Wall Street Bets subreddit. The possibilities with QLoRA are truly endless, offering a wide range of applications and customization options.

When working with QLoRA, considerations must be made regarding the dataset used and the specific objectives of the fine-tuning process.

You could probably capture the character of a variety of subreddits, for example, if that’s what you wanted. But again, you can do anything, and that’s what makes QLoRA so cool: you need such a small amount of data. Arguably, you can just make up your data set; you could hand-create your data set. It’s truly just awesome. For the data set that I use, I created and uploaded the Wall Street Bets subreddit data to Hugging Face, so feel free to use that data set if you want.

I did have issues with the end-of-sequence token. To my understanding, Llama 2, or really all of these Llama 2 models, terminate with an end-of-sequence token that is just a closing tag, `</s>`. From my understanding, your training data does not need to include that `</s>`. I tried both without it and with training data that did include it anyway, since that’s what the tokenizer knows, and in both cases it just never worked. It just never stopped generating.

When I first trained the model, I actually went to do a test inference, and I left for a little bit. When I came back, it was still doing the exact same thing. It had been doing it for like 45 minutes, just trying to generate one sample. It just never reached a stop token. It was very strange.

Anyway, I ended up making my data set with very clear comment and response tags, but these are not the traditional ones. You would probably need to add these to your tokenizer or do something else so that the model actually knows how to stop. You can do all kinds of different stop tokens and stop sequences and stuff like that, but mostly I was just trying to get an idea of how QLoRA worked. So this is what I ended up going with, but feel free to make changes. Like I said, you can do anything here.
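One way to deal with a model that never emits a stop token is to check for stop sequences yourself while generating, with a hard cap as a safety net. The sketch below is a hypothetical helper, not the code used for this project: `generate_until_stop`, the `### END` and `### COMMENT:` tags, and the cap of 256 pieces are all assumptions for illustration, and the list of strings stands in for text coming out of a model.

```python
from itertools import cycle

def generate_until_stop(pieces, stop_sequences, max_pieces=256):
    """Accumulate text pieces until a stop sequence appears or the cap is hit."""
    text = ""
    for count, piece in enumerate(pieces, start=1):
        text += piece
        # Positions of every stop sequence currently present in the text.
        hits = [text.find(stop) for stop in stop_sequences if stop in text]
        if hits:
            return text[:min(hits)]      # cut at the earliest stop sequence
        if count >= max_pieces:
            break                        # safety net: never run forever
    return text

# Hypothetical tags; substitute whatever markers your data set uses.
STOP_SEQUENCES = ["### END", "### COMMENT:"]

stream = iter(["to ", "the ", "moon\n", "### ", "END", "\nmore text"])
reply = generate_until_stop(stream, STOP_SEQUENCES)

# A stream that never produces a stop sequence still terminates at the cap.
runaway = generate_until_stop(cycle(["lol "]), STOP_SEQUENCES, max_pieces=5)
```

Here `reply` ends right before the `### END` marker, and the runaway stream is cut off after five pieces instead of running for 45 minutes.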

Also, my dataset here is 118,500 rows or samples, but truly you can do this with far less. I was seeing results after about 20 steps, which at a batch size of around 40 is about 800 samples. It really doesn’t require much at all. I would recommend further pruning down the dataset I used and curating it based on your specific needs. Up to this point, it has been my initial exploration of QLoRA, trying to understand how it works and if it is truly effective. Initially, it seemed unlikely that it could work, so I wanted to test it with a dataset. Keep in mind that this dataset was not meticulously curated; it was simply a pairing of comments and responses.

It is important to note that the model may generate responses that are no longer culturally acceptable, since the data goes as far back as 2017. This can be addressed by removing offensive content from the training dataset.

My goal was to create a more engaging chatbot and explore the use of QLoRA with a unique dataset that deviates from typical conversational data. During my research, I came across Yannic Kilcher’s GPT-4chan model, which is one of the few models banned by Hugging Face and which drew criticism from numerous researchers for being deployed on 4chan without proper consideration.

The backlash against that model was not primarily about its training but more about the way it was released and used on 4chan users. My intention was not to engage in similar practices.

The Hugging Face Trainer has learning rate decay built in, so every time I run that trainer, I’m resetting the learning rate. It decays down, jumps back up, decays down, then jumps right back up again. Sometimes this behavior is desired, but if unintended, it can be a mistake. Keep in mind that my method may not be optimal due to the fluctuating learning rate. Despite this, the loss continues to decrease. To conduct the training, I used an H100 server on the Lambda Cloud.
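To see why restarting the trainer produces that sawtooth, here is a small simulation. It assumes a plain linear decay to zero and made-up numbers (three runs of four steps, a peak learning rate of 2e-4); the real schedule depends on how the trainer was configured.

```python
def linear_decay(step, total_steps, peak_lr):
    """Learning rate that falls linearly from peak_lr toward zero."""
    return peak_lr * max(0.0, 1.0 - step / total_steps)

def restarted_schedule(runs, steps_per_run, peak_lr):
    """Learning rates seen when every run builds a fresh scheduler."""
    return [
        linear_decay(step, steps_per_run, peak_lr)
        for _ in range(runs)
        for step in range(steps_per_run)
    ]

def single_schedule(runs, steps_per_run, peak_lr):
    """Learning rates seen when one scheduler spans all of the steps."""
    total = runs * steps_per_run
    return [linear_decay(step, total, peak_lr) for step in range(total)]

restarted = restarted_schedule(runs=3, steps_per_run=4, peak_lr=2e-4)
continuous = single_schedule(runs=3, steps_per_run=4, peak_lr=2e-4)

for step, (a, b) in enumerate(zip(restarted, continuous)):
    print(f"step {step:2d}  restarted={a:.6f}  continuous={b:.6f}")
```

In the restarted column the rate climbs back to the peak at steps 4 and 8, while the continuous column only ever goes down. If the jumps are not wanted, the usual fix is to train in a single run or to resume with the scheduler state intact.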

Their GPUs are cost-effective, making them a popular choice. However, availability can be limited, requiring users to rely on tools like GPU sniper via the API to secure a spot. While other GPUs are available, the H100 is often in high demand.

QLoRA training for a model such as Llama 2 7B can be done on commonly available GPUs like 8 or 10 series. For those interested in sniping an H100, a script is provided for assistance. Additionally, a simple shell script is available for installing the necessary packages to begin the process. With the H100 on Lambda, I successfully conducted QLoRA fine-tuning after extensive testing and adjustments.

The project took approximately a day or two, but the actual time required to fully QLoRA fine-tune a model is just a matter of hours. The efficiency of QLoRA in delivering results quickly is remarkable, especially when paired with the high-speed H100 GPU.

QLoRA: The Adapter Concept

One fascinating aspect of QLoRA is the concept of the adapter. As you train a model, what gets saved is the adapter: not a copy of the model, but the small set of low-rank matrices that hold the fine-tuning changes. This adapter is then used during inference. For a 7B model, the size of the adapter is a mere 160 megabytes. This compact size opens up a world of possibilities for various applications. By attaching the adapter to the model, you instantly have the behavior of the new fine-tuned model.
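For a sense of where a number like 160 megabytes can come from, here is a back-of-the-envelope calculation. The Llama 2 7B shapes (32 layers, hidden size 4096, MLP size 11008) are the published ones, but the adapter settings are assumptions: rank 16, adapters on all seven linear projections in each layer, and 32-bit storage. The settings actually used for this project are not stated, so this is only one configuration that lands near that size.

```python
# Llama 2 7B shapes
NUM_LAYERS = 32
HIDDEN = 4096
MLP = 11008

# Assumed adapter settings (not taken from the article)
RANK = 16
BYTES_PER_VALUE = 4   # float32

# (input size, output size) of each adapted linear layer in one block
PROJECTIONS = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, HIDDEN),
    "v_proj": (HIDDEN, HIDDEN),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, MLP),
    "up_proj": (HIDDEN, MLP),
    "down_proj": (MLP, HIDDEN),
}

def lora_params(d_in, d_out, rank):
    """Values in A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)

per_layer = sum(lora_params(i, o, RANK) for i, o in PROJECTIONS.values())
total_params = per_layer * NUM_LAYERS
size_megabytes = total_params * BYTES_PER_VALUE / 1e6

print(total_params, round(size_megabytes, 1))
# prints: 39976960 159.9
```

Under these assumptions the adapter holds about 40 million values, a small fraction of the roughly 7 billion in the base model.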

This innovation has led to a current project I am involved in with the team at Skunk Works. We are training a mixture of experts, where each expert is represented by these QLoRA adapters. By utilizing these adapters, you can easily switch between experts. This approach eliminates the need to fine-tune multiple 7B models, each of which could consume a significant amount of memory, whether on your GPU or RAM.

By employing QLoRA adapters, the memory footprint is drastically reduced. Instead of dealing with large models, each fine-tuned Llama 2 model is represented by an adapter of only 160 or 200 megabytes. Furthermore, by increasing the number of experts while decreasing the model size, you can achieve even smaller memory requirements. This flexibility allows for the use of numerous experts, enhancing the efficiency and effectiveness of the models.
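The adapter-per-expert idea can be sketched with plain matrices: one shared base weight matrix and a small pair of low-rank matrices for each expert. Everything here is a toy assumption (the sizes, the rank, and the expert names `chat`, `code`, and `jokes`), meant only to show why switching experts is cheap.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 512, 8

W = rng.normal(size=(d, d))    # shared, frozen base weights

# One small (B, A) pair per expert; the names are hypothetical.
experts = {
    name: (rng.normal(size=(d, r)) * 0.01, rng.normal(size=(r, d)) * 0.01)
    for name in ("chat", "code", "jokes")
}

def forward(x, expert):
    """Base output plus the low-rank correction of the chosen expert."""
    B, A = experts[expert]
    return W @ x + B @ (A @ x)

x = rng.normal(size=d)
y_chat = forward(x, "chat")    # switching experts is just a dictionary lookup
y_code = forward(x, "code")

base_values = W.size
adapter_values = sum(B.size + A.size for B, A in experts.values())
full_copies_values = base_values * len(experts)   # three separately fine-tuned models
shared_values = base_values + adapter_values      # one base plus three adapters
print(full_copies_values, shared_values)
# prints: 786432 286720
```

Three full copies need 786,432 values, while one shared base with three adapters needs 286,720, and the gap widens with every expert you add.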

When using models like GPT-4, the possibilities for education and knowledge exploration are vast. With QLoRA, the potential for creating diverse and specialized models is immense.

When interacting with a setup like this, you are likely using only a small fraction of it at any one time. Swapping out adapters is incredibly fast, which opens up new possibilities that may not have been previously considered. Once your model is generating outputs that you like, you can run it right away in its quantized form with the adapter attached. However, if you wish to share the model for others to use at their preferred precision or convert it to a different format, you will need to de-quantize and merge the model.

To accomplish this, a short script can be used. It is essential to make sure that the script saves the final merged model correctly. Upon reviewing the script, it appears that it has been updated to address this issue. Once the merging process is completed, you can upload the model to Hugging Face and share it with others. I have personally uploaded adapters and full models for the Wall Street Bets fine-tunes of Llama 2 7B and 13B. Both models have shown similar results in terms of fine-tuning analysis.
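The merge step itself is simple arithmetic: add the product of the adapter matrices to the de-quantized base weights. The sketch below is a toy with assumed sizes and an assumed scaling factor, not the merge script mentioned above. In the Hugging Face PEFT library, LoRA models expose a `merge_and_unload` method for this step; here only the math is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 256, 8

W = rng.normal(size=(d, d))            # stands in for the de-quantized base weights
B = rng.normal(size=(d, r)) * 0.01     # trained adapter matrices (random stand-ins)
A = rng.normal(size=(r, d)) * 0.01
scaling = 2.0                          # alpha / rank, an assumed value

# Fold the adapter into the base weights once.
W_merged = W + scaling * (B @ A)

x = rng.normal(size=d)
y_adapter = W @ x + scaling * (B @ (A @ x))   # base model with adapter attached
y_merged = W_merged @ x                       # standalone merged model

print(np.allclose(y_adapter, y_merged))
# prints: True
```

The merged matrix has the same shape as the original, so the result can be saved and shared like any ordinary model, with no adapter needed at inference time.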

It is worth noting that these models may not receive extensive usage as they were primarily experimental. Further improvements may be necessary before widespread adoption. Despite this, the resulting models offer enhanced performance and are more refined, appealing to a broader audience.

It’s just amazing how fast, easy, and simple QLoRA fine-tuning really is. As I started diving into this, I realized that QLoRA is something that many people may not be paying attention to. In terms of fine-tuning and lightweight operations, I believe there are significant advancements on the horizon for this style of training models.

QLoRA is truly all you need for fast and lightweight model fine-tuning.