Understanding Transformer LLMs

Ah, large language models (LLMs) — the big brains of the AI world! To put it simply, imagine LLMs as the wizards of the language realm, capable of understanding and generating text like never before. Now, let’s dive into the world of Transformer LLMs and demystify how these incredible models work their magic!

Let’s break it down step-by-step for you:

First off, what exactly are these Large Language Models we’re talking about? Well, they’re essentially massive deep learning models that have been pre-trained on vast amounts of text. Picture them as linguistic giants built on the Transformer, a neural network architecture that excels at modeling the relationships between words and phrases in a given text.

Did you know? Transformers process all the tokens of a sequence in parallel, unlike older recurrent models that had to work through their inputs one step at a time. This parallelism speeds up training and lets GPUs chew through massive datasets efficiently.

Now, here’s why these large language models matter: they are incredibly versatile! From answering questions to summarizing documents, translating languages to completing sentences, LLMs can wear many hats and revolutionize how we interact with technology.

But how exactly do these marvels of technology function? The secret lies in how they represent words. Before any processing happens, text is split into tokens (words or pieces of words), and each token is mapped to a multi-dimensional vector known as an embedding. These numerical representations let the model capture contextual meanings and relationships between words, paving the way for coherent outputs.
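
To make the idea concrete, here is a minimal sketch of an embedding lookup in plain Python. The tiny vocabulary, the token IDs, and the 3-dimensional vectors are made up for illustration; real models learn their embeddings during training, use hundreds or thousands of dimensions, and work on subword tokens rather than whole words.

```python
import math

# Hypothetical toy vocabulary: each token maps to an integer ID.
VOCAB = {"king": 0, "queen": 1, "apple": 2}

# Hypothetical embedding table: one row (vector) per token ID.
# Real models learn these values; these are hand-picked for illustration.
EMBEDDINGS = [
    [0.9, 0.8, 0.1],    # king
    [0.85, 0.9, 0.15],  # queen
    [0.1, 0.05, 0.95],  # apple
]


def embed(tokens):
    """Turn a list of word tokens into a list of vectors via table lookup."""
    return [EMBEDDINGS[VOCAB[t]] for t in tokens]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


king, queen, apple = embed(["king", "queen", "apple"])
print(round(cosine_similarity(king, queen), 3))
print(round(cosine_similarity(king, apple), 3))
```

In trained models, words that appear in similar contexts tend to end up with vectors pointing in similar directions, which is why "king" scores closer to "queen" than to "apple" here. In a Transformer these vectors are only the starting point: the attention layers then adjust each token’s vector based on the words around it.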

Practical Tips and Insights: Large Language Models can be a game-changer in various fields:

1. Copywriting: Imagine AI assistants like ChatGPT or Claude crafting compelling copy or polishing sentences for style.

2. Knowledge base answering: LLMs can help pull specific information out of digital archives with ease.

3. Text classification: Use LLMs for tasks such as customer sentiment analysis or categorizing documents by topic.

4. Code generation: Let LLMs flex their coding skills by generating scripts in various programming languages.

5. Text generation: Unleash their creativity as they complete sentences or weave captivating tales.

And if you’re wondering how these titans are trained: it’s a demanding process involving colossal neural networks with billions of parameters. The models are first pre-trained on large, high-quality datasets and then fine-tuned for specific tasks using comparatively small supervised datasets.

As for the future of LLMs… well, it looks bright! Expect enhanced capabilities, training on audiovisual data such as video and audio inputs, and a wave of change in workplace dynamics!

Oh! Let’s not forget AWS stepping into the arena to help LLM developers. Services like Amazon Bedrock and SageMaker JumpStart offer tools for building and scaling generative AI applications quickly, which makes AWS a handy home for LLM enthusiasts!

Curious to explore more about Transformer LLMs? Stay tuned for more insights coming your way, including a look at Large Language Models on the AWS free tier. Trust me; you don’t want to miss out on this linguistic adventure!

The Importance of Large Language Models

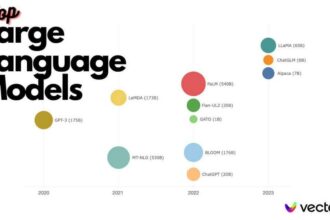

Large Language Models (LLMs) are becoming bigger and better (pun intended!). From predicting single words to entire documents, these language wizards have come a long way. With advancements in computer memory, dataset sizes, processing power, and modeling techniques for longer text sequences, the capabilities of modern LLMs have skyrocketed.

But what exactly counts as ‘large’ in Large Language Models? The line is fuzzy, but we’re talking about models ranging from BERT-base, with about 110 million parameters, all the way up to PaLM 2, reported to have around 340 billion parameters. That’s not just large; it’s colossal!
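
Where does a number like "110 million" come from? The sketch below counts the weights and biases of a BERT-style encoder layer by layer, assuming the published BERT-base configuration (30,522-token vocabulary, hidden size 768, 12 layers, feed-forward size 3,072, 512 positions, 2 segment types) plus the standard pooler. The function names are hypothetical helpers, and the memory figure covers only storing the weights, not training.

```python
def bert_param_count(vocab=30522, hidden=768, layers=12, intermediate=3072,
                     max_positions=512, type_vocab=2):
    """Parameter count for a BERT-style encoder (weights + biases)."""
    # Embeddings: token, position, and segment tables plus one LayerNorm (gain + bias).
    embeddings = (vocab + max_positions + type_vocab) * hidden + 2 * hidden

    # One encoder layer:
    attention = 4 * (hidden * hidden + hidden)  # Q, K, V and output projections
    ffn = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden)
    layer_norms = 2 * (2 * hidden)              # two LayerNorms per layer
    per_layer = attention + ffn + layer_norms

    # Pooler: one dense layer applied to the [CLS] token.
    pooler = hidden * hidden + hidden

    return embeddings + layers * per_layer + pooler


def memory_gb(num_params, bytes_per_param):
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


n = bert_param_count()
print(f"BERT-base: {n:,} parameters")
print(f"fp32 weights (4 bytes each): {memory_gb(n, 4):.2f} GB")
print(f"340B parameters in fp16 (2 bytes each): {memory_gb(340e9, 2):.0f} GB")
```

The count comes out at roughly 109.5 million, which rounds to the familiar 110 million. The same arithmetic shows why the largest models need clusters of accelerators: 340 billion parameters at 2 bytes each is about 680 GB for the weights alone, before counting gradients, optimizer state, and activations during training.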

So, what makes these Large Language Models so important? Well, imagine having one model that can ace tasks like answering questions, summarizing documents, translating languages, and completing sentences. These linguistic giants have the potential to shake up content creation, search engine usage, and even how we interact with virtual assistants.

Now that you know why they matter, let’s dive into the nitty-gritty of what makes Large Language Models tick. These models are trained on vast amounts of text data using deep learning algorithms to grasp the intricacies of natural language. They can tackle various language tasks with ease: think translating languages, analyzing sentiment, engaging in chatbot conversations, the works! They’re like the Swiss Army knives of the AI world, versatile and handy in multiple scenarios.

Fun Fact: LLMs can wrap their digital heads around complex textual data by identifying entities and the relationships between them. And hey presto! They can also cook up new text that is coherent and, in most cases, grammatically correct, which makes them handy for everything from information extraction to sentiment analysis.

But hold onto your neural networks; it doesn’t stop there! With billions of parameters, Large Language Models are flexible enough to serve a myriad of uses. Picture this: they can assist in copywriting magic (imagine Claude spinning captivating tales), knowledge base digging (extracting precious nuggets from digital archives), or even coding wizardry (yes, they can write code too!). The possibilities are as vast as their parameter count!

As large as LLMs may be, remember that their abilities come from meticulous training: massive neural networks are pre-trained first and then tweaked for specific tasks using small supervised datasets.

And what about the future? Well, buckle up, because the ride ahead looks thrilling! Expect even more capable LLMs, including models trained on video and audio inputs that can work across multiple kinds of media.

AWS has also jumped into the ring, helping developers explore the capabilities of LLMs through services like Amazon Bedrock and SageMaker JumpStart for building generative AI applications quickly, a clear sign that AWS is positioning itself as a home for AI development!

So there you have it: a glimpse into why Large Language Models matter and how they’re shaping our technological landscape one word at a time. Ready to explore more of the world of Transformer LLMs? Stay tuned for more intriguing insights coming your way soon! Trust me; this linguistic adventure is just getting started!

Transformer vs. Recurrent Neural Networks

Transformer LLMs have taken the AI world by storm, offering a fresh take on how sequential data is processed. Let’s delve into a comparison between Transformers and Recurrent Neural Networks to unravel their unique strengths and differences.

Recurrent Neural Networks (RNNs) are like the old guard of sequential data processing. They read a sequence one step at a time, feeding a hidden state from each step into the next, which lets them retain a memory of past inputs. However, training an RNN means backpropagating through every one of those steps, and the gradients can shrink (vanish) or blow up (explode) as sequences grow longer, making it hard for RNNs to learn long-term dependencies.
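
A tiny numeric experiment shows why long sequences are hard for RNNs. The sketch below uses a deliberately simplified scalar recurrence, h_t = w * h_{t-1} + x_t, with no nonlinearity; that is an assumption made for clarity, since real RNNs use vectors, weight matrices, and activations such as tanh. Backpropagation through time multiplies in one local derivative per step, so the gradient of the last state with respect to the first is w raised to the sequence length.

```python
def gradient_through_time(w, steps):
    """d h_T / d h_0 for the simplified scalar RNN h_t = w * h_{t-1} + x_t.

    Backpropagation through time multiplies the local derivative
    d h_t / d h_{t-1} = w once per step, so the result equals w ** steps.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule: one factor per recurrent step
    return grad


for w in (0.5, 1.0, 1.5):
    print(f"w={w}: gradient after 50 steps = {gradient_through_time(w, 50):.3e}")
```

With w = 0.5 the influence of the first step drops below 1e-15 after 50 steps (vanishing), while w = 1.5 inflates it to roughly 6e8 (exploding). Only a factor of exactly 1 keeps the signal stable, which is why plain RNNs struggle to connect distant words.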

Enter Transformers, the new kids on the block shaking up the game! They differ from RNNs significantly in how they process sequences. Instead of stepping through tokens one by one, a Transformer uses self-attention to relate every position to every other position at once, so the work can be done in parallel. Because any two tokens are connected directly rather than through a long chain of recurrent steps, Transformers largely sidestep the vanishing and exploding gradients that plague RNNs over long sequences. The trade-off is that standard attention compares every token with every other token, so its cost grows quadratically with sequence length.
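
The core operation behind this is scaled dot-product self-attention. The sketch below is a simplified, single-head version in plain Python that uses the input vectors directly as queries, keys, and values; real models first apply learned projection matrices, run many heads, and use GPU matrix libraries. Notice that each position’s output depends only on the inputs, never on a previous position’s output, so all rows could be computed at the same time.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs):
    """Single-head scaled dot-product self-attention without learned projections.

    xs: list of n vectors, each of dimension d. Queries, keys, and values are
    all taken to be xs itself (a simplifying assumption).
    Returns (outputs, weights): n output vectors and the n x n weight matrix.
    """
    d = len(xs[0])
    scale = math.sqrt(d)
    weights = []
    outputs = []
    # Each iteration reads only xs, never an earlier output,
    # so all n rows are independent and could run in parallel.
    for q in xs:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in xs]
        w = softmax(scores)
        weights.append(w)
        # Output = attention-weighted average of the value vectors.
        out = [sum(w[j] * xs[j][c] for j in range(len(xs))) for c in range(d)]
        outputs.append(out)
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(p, 3) for p in row])
```

The weight matrix has one row and one column per token, so n tokens produce n × n scores. That is the quadratic cost mentioned above, and also the reason any token can draw on any other token in a single step.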

Did you know? The Transformer architecture has caused quite a stir in natural language processing ever since its debut in 2017, and it has spread well beyond text. In computer vision, for example, DETR uses a Transformer for object detection and drops hand-designed components that detectors like Faster R-CNN depend on, such as anchor boxes and non-maximum suppression, giving a simpler pipeline with comparable accuracy. It’s like swapping a cluttered toolbox for one sleek multi-tool!

Set Transformer-based detectors such as DETR beside region-based convolutional networks (R-CNN and successors such as Faster R-CNN), and you can see how versatile Transformer models are, now competing well beyond NLP.

It seems Transformers have truly changed the game when it comes to handling sequential data efficiently!

As we marvel at these cutting-edge models paving the way for smarter AI applications, it’s worth recognizing that each architecture brings its own flavor to the mix. Transformers shine at parallel training and capturing long-range dependencies, while RNNs process inputs step by step with a fixed-size hidden state, which can still suit streaming or memory-constrained settings. Both have played crucial roles in shaping AI technology.

Now that you’ve glimpsed this exciting clash between Transformer LLMs and traditional Recurrent Neural Networks, get ready for more revelations about these linguistic prodigies as we dig deeper into Large Language Models and how to explore them on the AWS free tier! Trust me; this adventure is only getting started!

Excited for more insights? Stay tuned for an immersive dive into Transformers vs Deep Learning Revealed—grab your linguistic surfboards; we’re riding high tides of knowledge ahead!

Training Transformer LLMs: Methods and Challenges

Large Language Models (LLMs) are like the Swiss Army knives of the AI world, versatile enough to handle tasks ranging from answering questions to summarizing documents and even generating text. These linguistic giants, powered by their transformer model architecture, excel at understanding and producing human-like text.

So, what can you actually use these LLMs for? Well, imagine having an AI assistant that can churn out compelling copy for your website or draft code snippets in multiple programming languages with ease. Picture it tackling sentiment analysis in customer reviews or swiftly categorizing documents by topic. These models are true jacks-of-all-trades!

But wait, there’s more! LLMs can also translate between languages, complete sentences with finesse, or power engaging chatbot conversations. The possibilities are as vast as the data they’re trained on!

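Fun Fact: Did you know that LLMs typically go through several training stages? Self-supervised pre-training teaches them to predict the next token in huge amounts of raw text, supervised fine-tuning then trains them on curated examples of desired responses, and reinforcement learning (often from human feedback) further aligns their behavior with what people prefer. Each step plays a crucial role in shaping these models into the text wizards they are!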
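
The self-supervised stage is the easiest to illustrate: the training labels come from the text itself, because the target at each position is simply the next token. The sketch below builds (context, next-token) pairs from a sentence and computes the average cross-entropy loss for a model’s predicted probabilities. Whitespace splitting stands in for a real subword tokenizer, and `predict` is a hypothetical placeholder that guesses uniformly, not a trained model.

```python
import math


def next_token_pairs(tokens):
    """Build (context, target) training pairs: predict token i from tokens[:i]."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cross_entropy(prob_of_target):
    """Loss for one prediction: -log p(correct next token)."""
    return -math.log(prob_of_target)


def predict(context, vocab):
    """Hypothetical placeholder model: equal probability for every token."""
    return {tok: 1.0 / len(vocab) for tok in vocab}


text = "the cat sat on the mat"
tokens = text.split()  # stand-in for a real subword tokenizer
vocab = sorted(set(tokens))

pairs = next_token_pairs(tokens)
losses = [cross_entropy(predict(ctx, vocab)[target]) for ctx, target in pairs]
avg_loss = sum(losses) / len(losses)

for ctx, target in pairs:
    print(" ".join(ctx), "->", target)
print(f"average loss: {avg_loss:.3f} (uniform guess over {len(vocab)} tokens)")
```

A uniform guess over five tokens scores ln 5 ≈ 1.609. Pre-training lowers this loss by raising the probability the model assigns to each actual next token, and no human labeling is needed to create the targets. The later supervised and reinforcement learning stages then refine that pre-trained model.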

Now imagine unleashing these linguistic wizards into various domains like multimedia tasks (multimodal), handling multiple languages (multilingual), focusing on specific areas like medical or legal jargon (domain-specific), or simply being ready for any task thrown their way (general-purpose). These adaptations highlight just how flexible and powerful LLMs can be based on the job at hand.

So, dear reader, the next time you reach for that virtual pen to write compelling content or puzzle over complex translations, remember that a trusty Large Language Model by your side might just be the magical solution you’ve been looking for! Excited to explore how these language whizzes can transform your tasks? Stay tuned for more thrilling insights coming your way soon! You’re about to unlock a whole new world of possibilities with LLMs, so get ready to be amazed!

- Large Language Models (LLMs) are massive deep learning models pre-trained on vast amounts of data and built on the Transformer architecture, which excels at modeling relationships between words and phrases.

- Transformers process sequences in parallel, speeding up training and enabling efficient handling of massive datasets using GPUs.

- LLMs are incredibly versatile: they can answer questions, summarize documents, translate languages, and complete sentences, changing how we interact with technology.

- The key to LLMs’ functionality lies in their word representations: text is split into tokens and converted into multi-dimensional vectors (embeddings), which capture contextual meanings and relationships between words.

- Practical applications of Large Language Models include copywriting assistance, knowledge base answering, text classification for sentiment analysis or document categorization, code generation in various programming languages, and text generation.